So far we have only computed shortest paths from a single start vertex to all other vertices. The All-Pairs Shortest Path (APSP) problem asks for a shortest path between every pair of vertices $u, v \in V$.

The naive way to do this would be to run one of our known single-source algorithms $n$ times, once for each possible source vertex ($n = |V|$, $m = |E|$):

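| Cost Function | Algorithm | Runtime |
|---|---|---|
| $c \equiv 1$ (unit costs) | BFS repeated | $O(n(n+m))$ |
| $c \ge 0$ | Dijkstra’s repeated | $O(n(n+m)\log n)$ |
| $c$ arbitrary (no negative cycles) | Bellman-Ford repeated | $O(n^2 m)$ |

Note that if we have $m \in \Theta(n^2)$ for a fully connected graph, BF gives $O(n^4)$.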
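
As an illustration of the naive approach, here is a minimal sketch of "Dijkstra’s repeated". It assumes non-negative costs, vertices labelled 0..n-1 and an adjacency list `adj[u] = [(v, cost), ...]`; the helper names `dijkstra` and `all_pairs_dijkstra` are hypothetical, and the binary heap (`heapq`) gives $O((n+m)\log n)$ per source.

```python
import heapq
import math


def dijkstra(adj, s):
    """Single-source shortest distances from s; requires non-negative costs."""
    n = len(adj)
    dist = [math.inf] * n
    dist[s] = 0
    heap = [(0, s)]  # (tentative distance, vertex)
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry: u was already settled with a smaller distance
        for v, cost in adj[u]:
            if d + cost < dist[v]:
                dist[v] = d + cost
                heapq.heappush(heap, (dist[v], v))
    return dist


def all_pairs_dijkstra(adj):
    """Naive APSP: one Dijkstra run per source vertex."""
    return [dijkstra(adj, s) for s in range(len(adj))]


# Hypothetical example: 0 -> 1 (cost 4), 0 -> 2 (cost 1), 2 -> 1 (cost 2)
adj = [[(1, 4), (2, 1)], [], [(1, 2)]]
D = all_pairs_dijkstra(adj)
print(D[0])  # [0, 3, 1]
```

Unreachable pairs stay at `math.inf`, e.g. `D[1][0]`.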

Floyd-Warshall

Restrictions: No negative cycles. Runtime: $O(n^3)$

Floyd-Warshall utilises dynamic programming to compute all pairwise shortest-path distances at once, more efficiently than repeated Bellman-Ford. Once the DP table has been computed, each distance query is an $O(1)$ lookup.

Recurrence

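Consider a graph with vertices $V = \{1, \dots, n\}$. Our subproblem is $d^{k}_{uv}$ = "length of a shortest path from $u$ to $v$ that passes only through (intermediate) vertices with index $\le k$". (This gives us a 3D DP table.)

There are three options in the recurrence here (for $k \ge 1$):

- The path does not use vertex $k$ (it is shorter without it): $d^{k}_{uv} = d^{k-1}_{uv}$

- The path uses vertex $k$ exactly once: $d^{k}_{uv} = d^{k-1}_{uk} + d^{k-1}_{kv}$

- The path uses $k$ more often (only worth it if $k$ is on a negative cycle, thus we ignore it)

Taking the better of the first two options gives $d^{k}_{uv} = \min\left(d^{k-1}_{uv},\ d^{k-1}_{uk} + d^{k-1}_{kv}\right)$.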

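Our base case is $k = 0$ (no intermediate vertices allowed):

- If $u = v$: $d^{0}_{uu} = \min(0, c(u, u))$, which is just $0$ if there is no self-loop at $u$

- If $u \neq v$ and $(u, v) \in E$: $d^{0}_{uv} = c(u, v)$

- Otherwise: $d^{0}_{uv} = \infty$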
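
To make the recurrence concrete, here is a minimal sketch that fills the full 3D table `dp[k][u][v]` exactly as defined above. It assumes vertices 1..n and edge costs given as a dict `cost[(u, v)]`; the function name `floyd_warshall_3d` and this input format are hypothetical. Layer `k` only ever reads layer `k - 1`, which is what the 2D optimisation below exploits.

```python
import math


def floyd_warshall_3d(n, cost):
    """dp[k][u][v] = length of a shortest u-v path whose intermediate vertices
    all have index <= k. Vertices are 1..n (index 0 is unused so that indices
    match the notes). Assumes there are no negative cycles."""
    INF = math.inf
    dp = [[[INF] * (n + 1) for _ in range(n + 1)] for _ in range(n + 1)]

    # Base case k = 0: only the empty path u -> u and direct edges
    for u in range(1, n + 1):
        for v in range(1, n + 1):
            if u == v:
                dp[0][u][v] = min(0, cost.get((u, u), 0))
            elif (u, v) in cost:
                dp[0][u][v] = cost[(u, v)]

    # Recurrence: either avoid vertex k or pass through it exactly once
    for k in range(1, n + 1):
        for u in range(1, n + 1):
            for v in range(1, n + 1):
                dp[k][u][v] = min(dp[k - 1][u][v],
                                  dp[k - 1][u][k] + dp[k - 1][k][v])
    return dp


# Hypothetical example: 1 -> 2 (cost 3), 2 -> 3 (cost -1), 1 -> 3 (cost 5)
cost = {(1, 2): 3, (2, 3): -1, (1, 3): 5}
dp = floyd_warshall_3d(3, cost)
print(dp[1][1][3], dp[2][1][3])  # 5 2
```

The detour $1 \to 2 \to 3$ only becomes allowed at $k = 2$, which is why the entry drops from 5 to 2 between those layers.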

Implementation (Bottom-Up)

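We can optimise the recurrence to store the values not in a 3D array but in a 2D array only: layer $k$ only reads values from layer $k-1$, so we keep just the latest values and update them in place. This is safe because row $k$ and column $k$ do not change during iteration $k$, e.g. $d^{k}_{uk} = \min(d^{k-1}_{uk},\ d^{k-1}_{uk} + d^{k-1}_{kk}) = d^{k-1}_{uk}$, since $d^{k-1}_{kk} \ge 0$ without negative cycles.

The best way to store the edges and their costs is an adjacency matrix, as it allows looking up $c(u, v)$ in $O(1)$, which is the only operation needed here.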

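```python
def FloydWarshall(V, E, c):
    # Assumes vertices are labelled 1..n, E is a set of (u, v) pairs and
    # c((u, v)) returns the cost of edge (u, v)
    n = len(V)
    d = [[float('inf')] * n for _ in range(n)]  # Initialize distances to infinity

    # Base cases: distance to self is 0, direct edge costs
    for u in V:
        d[u-1][u-1] = 0
        for v in V:
            if (u, v) in E:
                # min() keeps d[u][u] = 0 unless there is a negative self-loop
                d[u-1][v-1] = min(d[u-1][v-1], c((u, v)))

    # Main dynamic programming loop
    for k in range(1, n + 1):          # Intermediate vertices allowed: 1 to k
        for i in range(1, n + 1):      # Source vertex
            for j in range(1, n + 1):  # Destination vertex
                d[i-1][j-1] = min(d[i-1][j-1], d[i-1][k-1] + d[k-1][j-1])

    return d
```

Important: In Java with `int` distances, use a sentinel like 10000 for infinity instead of `Integer.MAX_VALUE`; otherwise `d[i][k] + d[k][j]` overflows and wraps around to a negative number. The sentinel must be larger than any real shortest-path length, and twice its value must still fit in an `int`.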

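We can also read off the shortest paths themselves by keeping a `pred` array in which we store, for each pair $(i, j)$, the vertex $k$ that led to the last update of $d_{ij}$. Then we can recursively reconstruct the path as the path from $i$ to $k$ followed by the path from $k$ to $j$.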
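
A minimal sketch of this `pred` idea, assuming vertices 0..n-1, costs as a dict `{(u, v): c}` and no negative cycles; the function names `floyd_warshall_with_pred` and `reconstruct` are hypothetical. `pred[i][j] is None` means the best path found is the direct edge.

```python
import math


def floyd_warshall_with_pred(n, cost):
    """In-place Floyd-Warshall that also records, for every pair (i, j), the
    vertex k whose use last improved d[i][j] (None = direct edge)."""
    d = [[math.inf] * n for _ in range(n)]
    pred = [[None] * n for _ in range(n)]
    for u in range(n):
        d[u][u] = 0
    for (u, v), c in cost.items():
        d[u][v] = min(d[u][v], c)
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
                    pred[i][j] = k  # the path i -> j now goes through k
    return d, pred


def reconstruct(d, pred, i, j):
    """Recursively rebuild a shortest path i -> j as a list of vertices."""
    if d[i][j] == math.inf:
        return None  # j is not reachable from i
    if i == j:
        return [i]
    k = pred[i][j]
    if k is None:
        return [i, j]  # the direct edge is a shortest path
    # Split at k and glue both halves together, dropping the duplicated k
    return reconstruct(d, pred, i, k) + reconstruct(d, pred, k, j)[1:]


cost = {(0, 1): 4, (0, 2): 1, (2, 1): 2, (1, 3): 1}
d, pred = floyd_warshall_with_pred(4, cost)
print(reconstruct(d, pred, 0, 3), d[0][3])  # [0, 2, 1, 3] 4
```

Without negative cycles, $d_{ik}$ and $d_{kj}$ no longer change once they were used to set `pred[i][j] = k`, so the recursion always descends to pairs whose stored `k` is smaller and therefore terminates.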

Runtime

We can easily read off the runtime from the three nested for loops: each runs $n$ times and the loop body takes $O(1)$, so Floyd-Warshall runs in $O(n^3)$.

Negative Cycles

Floyd-Warshall detects negative cycles in a similar way to Bellman-Ford.

Negative Cycles with Floyd-Warshall

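There exists a negative cycle $\iff$ there is a vertex $v \in V$ with $d^{n}_{vv} < 0$.

In words: if after the $n$-th iteration of the outer loop (i.e. once paths may pass through any vertex) there is a path from a vertex to itself with negative weight, then this is a closed walk of negative weight, so the graph contains a negative cycle (one that is reachable from and reaches this vertex). Conversely, every negative cycle leads to such a negative diagonal entry, as the proof below shows.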

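We can thus check for the existence of a negative cycle by running the following check:

```python
# Negative cycle detection (inside FloydWarshall, after the main loop and before `return d`)
for v in V:
    if d[v-1][v-1] < 0:
        return "Negative Cycle Detected"
```

Proof (of "$\Rightarrow$", i.e. every negative cycle produces a negative diagonal entry):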

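- Assume a negative cycle exists. Then there is also a simple negative cycle $C$, since every closed walk of negative weight contains one. If $C$ is a single self-loop $(u, u)$, the base case already gives $d^{0}_{uu} = c(u, u) < 0$, so assume $C$ has at least two vertices.

- Let $k$ be the vertex with the highest index of all vertices in the cycle, and let $u \neq k$ be another vertex on $C$. Decompose $C$ into a path $P_1$ from $u$ to $k$ and a path $P_2$ from $k$ back to $u$. All intermediate vertices of $P_1$ and $P_2$ have index $< k$, which gives $d^{k-1}_{uk} \le c(P_1)$ and $d^{k-1}_{ku} \le c(P_2)$.

- We can now use the subproblems (true by the optimality principle of DP): $d^{k}_{uu} \le d^{k-1}_{uk} + d^{k-1}_{ku} \le c(P_1) + c(P_2) = c(C)$.

- Thus $d^{k}_{uu} \le c(C) < 0$. Because entries never increase in later iterations, $d^{n}_{uu} < 0$, so there is at least one negative diagonal entry.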

Note: If a negative cycle exists but it is not reachable from $u$, or none of its vertices reaches $v$, we can ignore it: the computed distance $d_{uv}$ is still correct.
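
Putting the main loop and the diagonal check together, a minimal self-contained sketch could look as follows. It assumes vertices 0..n-1 and costs as a dict `{(u, v): c}`; the name `floyd_warshall_checked` and returning `None` on a negative cycle are hypothetical choices.

```python
import math


def floyd_warshall_checked(n, cost):
    """Floyd-Warshall on vertices 0..n-1 with cost = {(u, v): c}.
    Returns the distance matrix, or None if a negative cycle exists."""
    d = [[math.inf] * n for _ in range(n)]
    for u in range(n):
        d[u][u] = 0
    for (u, v), c in cost.items():
        d[u][v] = min(d[u][v], c)  # a negative self-loop already makes d[u][u] < 0
    for k in range(n):
        for i in range(n):
            for j in range(n):
                if d[i][k] + d[k][j] < d[i][j]:
                    d[i][j] = d[i][k] + d[k][j]
    # Negative diagonal entry after the n-th iteration <=> negative cycle
    if any(d[v][v] < 0 for v in range(n)):
        return None
    return d


ok = floyd_warshall_checked(3, {(0, 1): 2, (1, 2): -1, (2, 0): 1})   # cycle cost 2
bad = floyd_warshall_checked(3, {(0, 1): 2, (1, 2): -4, (2, 0): 1})  # cycle cost -1
print(ok[0][2], bad)  # 1 None
```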

Johnson’s Algorithm

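Runtime: $O(n(n+m)\log n)$ (exactly as fast as $n$ times Dijkstra’s, but handles negative edge costs) Requirements: Negative edges allowed, no negative cycles

Johnson’s reweights all edges to non-negative costs so that Dijkstra’s can run on the graph. It does this by assigning a height $h(v)$ to each vertex $v$. The new cost of an edge $(u, v)$ is then $\hat{c}(u, v) = c(u, v) + h(u) - h(v)$.

This means that for a path $P = (v_0, v_1, \dots, v_\ell)$ the heights cancel out in pairs: $\hat{c}(P) = \sum_{i=1}^{\ell} \left(c(v_{i-1}, v_i) + h(v_{i-1}) - h(v_i)\right)$ gives $\hat{c}(P) = c(P) + h(v_0) - h(v_\ell)$. This is called a telescoping sum. Since the offset $h(v_0) - h(v_\ell)$ is the same for every path from $v_0$ to $v_\ell$, a shortest path with respect to $c$ is also a shortest path with respect to $\hat{c}$.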
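
A small numeric check of the telescoping identity. The graph and the heights below are hypothetical and arbitrary (they do not make all costs non-negative); the point is that both paths from `a` to `d` are shifted by the same $h(a) - h(d) = -2$. The helper name `path_cost` is also hypothetical.

```python
def path_cost(path, c):
    """Sum of edge costs along a path given as a list of vertices."""
    return sum(c[(u, v)] for u, v in zip(path, path[1:]))


# Hypothetical graph and arbitrary heights
c = {("a", "b"): 3, ("b", "c"): -2, ("c", "d"): 4, ("a", "c"): 2}
h = {"a": 5, "b": -1, "c": 0, "d": 7}

# Reweighted cost: c_hat(u, v) = c(u, v) + h(u) - h(v)
c_hat = {(u, v): w + h[u] - h[v] for (u, v), w in c.items()}

for path in (["a", "b", "c", "d"], ["a", "c", "d"]):
    # Telescoping: all inner heights cancel, only h(start) - h(end) remains
    assert path_cost(path, c_hat) == path_cost(path, c) + h[path[0]] - h[path[-1]]
    print(path, path_cost(path, c), path_cost(path, c_hat))
# prints: ['a', 'b', 'c', 'd'] 5 3  and then  ['a', 'c', 'd'] 6 4
```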

Naive Approach

Why adding a constant to every edge (equal to the absolute value of the most negative edge cost, so that this edge becomes 0) doesn’t work: a path with more edges gets increased in cost more than a path with fewer edges, so the order of path lengths can change. We want the ordering to stay the same, thus the change in cost must only depend on the start and end vertex, not on which path was taken.
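
A tiny counterexample for the constant shift, on a hypothetical graph with a direct edge `s -> t` and a three-edge detour `s -> a -> b -> t` (the helper `path_cost` is hypothetical as well):

```python
def path_cost(path, c):
    """Sum of edge costs along a path given as a list of vertices."""
    return sum(c[(u, v)] for u, v in zip(path, path[1:]))


c = {("s", "t"): 1, ("s", "a"): -2, ("a", "b"): 1, ("b", "t"): 1}
shift = -min(c.values())  # 2: makes the most negative edge cost exactly 0
c_shifted = {e: w + shift for e, w in c.items()}

direct, detour = ["s", "t"], ["s", "a", "b", "t"]
print(path_cost(direct, c), path_cost(detour, c))                  # 1 0: detour is shorter
print(path_cost(direct, c_shifted), path_cost(detour, c_shifted))  # 3 6: now the direct edge wins
```

The detour has three edges and is shifted by $3 \cdot 2 = 6$, the direct edge only by $2$, so the shortest path changes.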

How to determine the heights

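We need to choose the heights such that all reweighted costs are non-negative, i.e. $h(v) \le h(u) + c(u, v)$ for every edge $(u, v) \in E$, which at first seems like a hard problem. Note that this system has no solution if there are negative cycles: summing the inequalities along a cycle $C$ cancels all heights and gives $0 \le c(C)$. Such cycles will also be reported during the Bellman-Ford run, and then we can abort the computation.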

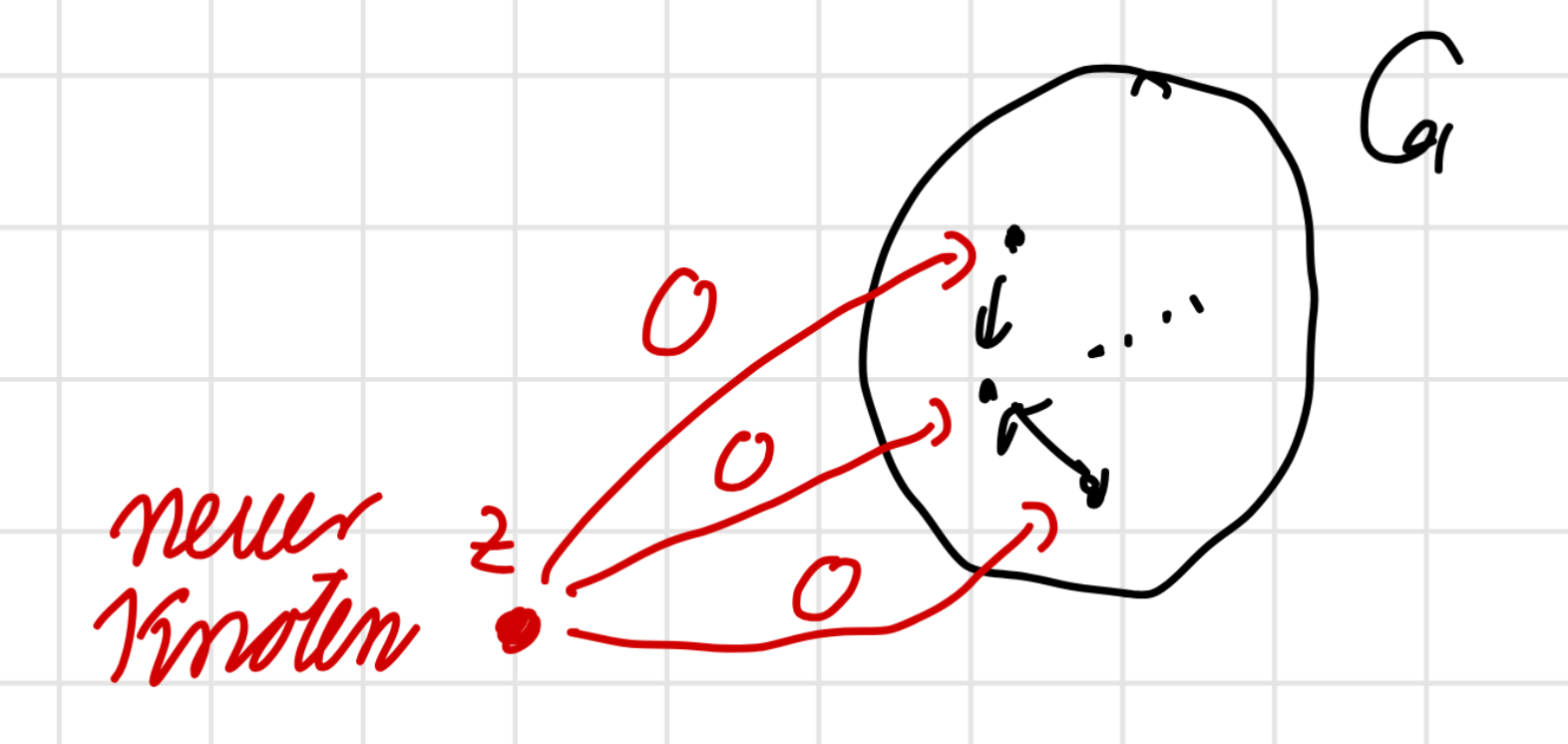

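The solution is to add a new vertex $z$ which has a directed edge of cost 0 to every vertex in the graph.

We then run Bellman-Ford on this augmented graph starting from $z$ and set the height of each vertex to its distance from $z$: $h(v) = d(z, v)$. Since $z$ has no incoming edges, it creates no new cycles, and every vertex is reachable from $z$, so all heights are finite. By the triangle inequality and the definition of shortest paths, $d(z, v) \le d(z, u) + c(u, v)$ for every edge $(u, v) \in E$, which after rearranging gives $\hat{c}(u, v) = c(u, v) + h(u) - h(v) \ge 0$, exactly what we want. We can now run Dijkstra’s.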
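
A minimal sketch of this height computation, assuming vertices 0..n-1 and an edge list of `(u, v, cost)` triples; the function name `johnson_heights` is hypothetical, and the added vertex $z$ simply gets index `n`. It returns `None` if Bellman-Ford detects a negative cycle.

```python
import math


def johnson_heights(n, edges):
    """h(v) = d(z, v) for a virtual vertex z with 0-cost edges to all vertices,
    computed with Bellman-Ford. Returns None on a negative cycle."""
    z = n  # index of the added vertex
    aug = edges + [(z, v, 0) for v in range(n)]
    h = [math.inf] * (n + 1)
    h[z] = 0
    for _ in range(n):  # n + 1 vertices in the augmented graph -> n rounds
        for u, v, w in aug:
            if h[u] + w < h[v]:
                h[v] = h[u] + w
    # One more round: any further improvement means a negative cycle
    if any(h[u] + w < h[v] for u, v, w in aug):
        return None
    return h[:n]  # drop z itself


edges = [(0, 1, -2), (1, 2, 3), (0, 2, 4), (2, 3, -1)]
h = johnson_heights(4, edges)
c_hat = [(u, v, w + h[u] - h[v]) for u, v, w in edges]
print(h)      # [0, -2, 0, -1]
print(c_hat)  # [(0, 1, 0), (1, 2, 1), (0, 2, 4), (2, 3, 0)]
```

All reweighted costs come out non-negative, as the triangle-inequality argument predicts.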

| Step | Description | Runtime |
|---|---|---|
| 1. Augment Graph | Add a new vertex $z$ and connect it to all existing vertices with zero-weight edges. | $O(n)$ |
| 2. Compute Heights | Run Bellman-Ford from $z$ to calculate the height function $h(v) = d(z, v)$ (shortest distance from $z$ to $v$). Detect negative cycles: if a negative cycle is found, report it and terminate. | $O(n(n+m))$ |
| 3. Reweight Edges | Compute reweighted edge costs $\hat{c}(u, v) = c(u, v) + h(u) - h(v)$ for all edges $(u, v) \in E$. | $O(m)$ |
| 4. Run Dijkstra’s | Run Dijkstra’s algorithm from each vertex in the reweighted graph to compute all-pairs shortest paths. | $O(n(n+m)\log n)$ |
| 5. Undo Edge-Weights | Convert the distances back to the original weights using $d(u, v) = \hat{d}(u, v) - h(u) + h(v)$. | $O(n^2)$ |

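The runtime is dominated by steps 2 and 4, but as $O(n(n+m)) \subseteq O(n(n+m)\log n)$, we get just the runtime of $n$ times Dijkstra’s: $O(n(n+m)\log n)$.

This may sound surprising, but because computing all pairs is already expensive, the single Bellman-Ford pre-computation comes for “free”.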
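
The five steps can be combined into one minimal, self-contained sketch. It assumes vertices 0..n-1, an edge list of `(u, v, cost)` triples and a binary-heap Dijkstra (`heapq`), and returns `None` on a negative cycle; the function name `johnson` and the input format are hypothetical choices, not taken from the notes.

```python
import heapq
import math


def johnson(n, edges):
    """All-pairs shortest paths on vertices 0..n-1, edges = [(u, v, cost)].
    Negative costs allowed; returns None if there is a negative cycle."""
    # Steps 1 + 2: virtual vertex z = n with 0-cost edges, Bellman-Ford from z
    aug = edges + [(n, v, 0) for v in range(n)]
    h = [math.inf] * (n + 1)
    h[n] = 0
    for _ in range(n):
        for u, v, w in aug:
            if h[u] + w < h[v]:
                h[v] = h[u] + w
    if any(h[u] + w < h[v] for u, v, w in aug):
        return None  # negative cycle: abort

    # Step 3: reweight, c_hat(u, v) = c(u, v) + h(u) - h(v) >= 0
    adj = [[] for _ in range(n)]
    for u, v, w in edges:
        adj[u].append((v, w + h[u] - h[v]))

    # Step 4: Dijkstra from every source on the reweighted graph
    dist = []
    for s in range(n):
        d_hat = [math.inf] * n
        d_hat[s] = 0
        heap = [(0, s)]
        while heap:
            du, u = heapq.heappop(heap)
            if du > d_hat[u]:
                continue  # stale heap entry
            for v, w in adj[u]:
                if du + w < d_hat[v]:
                    d_hat[v] = du + w
                    heapq.heappush(heap, (d_hat[v], v))
        # Step 5: undo the reweighting, d(s, v) = d_hat(s, v) - h(s) + h(v)
        dist.append([d_hat[v] - h[s] + h[v] for v in range(n)])
    return dist


D = johnson(4, [(0, 1, -2), (1, 2, 3), (0, 2, 4), (2, 3, -1)])
print(D[0])  # [0, -2, 1, 0]
```

Unreachable pairs stay at `math.inf` after step 5, because the heights are finite.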

When to use F-W, when Johnson’s

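Dense Graphs: ($m \in \Theta(n^2)$, fully connected for example). Floyd-Warshall is more efficient here, as the $O(n(n+m)\log n)$ of Johnson’s is actually $O(n^3 \log n)$ and thus more expensive than $O(n^3)$.

Sparse Graphs: ($m \in O(n)$, trees for example). Here Johnson’s shines, running in $O(n^2 \log n)$ instead of $O(n^3)$.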