We can model a number of graph problems using matrices. This then allows us to use techniques from linear algebra (Strassen’s, iterative squaring) to speed up computation.

Problems in Matrix version

Existence of Walks

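We can first reformulate this as a DP problem. Let $W^{(k)}_{ij} = 1$ iff there exists a walk of length $k$ from $i$ to $j$. Then we have the following recurrence: $W^{(k)}_{ij} = \bigvee_{l} \left( W^{(k-1)}_{il} \wedge A_{lj} \right)$, where $A_{lj} = 1$ iff there is an edge from $l$ to $j$, and $W^{(0)} = I$.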
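The recurrence is a matrix product in which OR replaces addition and AND replaces multiplication. Below is a minimal sketch of that idea, assuming the graph is given as a boolean adjacency matrix; the names `bool_mat_mult` and `walks_of_length` are made up for illustration.

```python
def bool_mat_mult(X, Y):
    """Boolean matrix product: Z[i][j] = OR over l of (X[i][l] AND Y[l][j])."""
    n = len(X)
    return [[any(X[i][l] and Y[l][j] for l in range(n)) for j in range(n)]
            for i in range(n)]


def walks_of_length(A, k):
    """Return W where W[i][j] is True iff a walk with exactly k edges leads from i to j."""
    n = len(A)
    # Base case k = 0: only the empty walk from a vertex to itself exists.
    W = [[i == j for j in range(n)] for i in range(n)]
    for _ in range(k):
        # One DP step: extend every walk by one more edge.
        W = bool_mat_mult(W, A)
    return W


# Hypothetical example graph: the directed path 0 -> 1 -> 2.
A = [[False, True, False],
     [False, False, True],
     [False, False, False]]
W2 = walks_of_length(A, 2)
```

Here `W2[0][2]` is true (the walk 0, 1, 2), while `W2[0][1]` is false because no walk with exactly two edges ends at vertex 1.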

Minimal Cost of a Walk

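The minimal cost can be formulated in a very similar way: take the minimum, over all vertices $l$ with an edge to $j$, of the cost of the cheapest walk with $k-1$ edges from $i$ to $l$ plus the cost $c(l, j)$ of that last edge. That is, $D^{(k)}_{ij} = \min_{l} \left( D^{(k-1)}_{il} + c(l, j) \right)$.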
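This is again a matrix product, now with `min` in place of addition and `+` in place of multiplication (often called a min-plus product). The sketch below assumes missing edges are encoded as infinite cost; `min_plus_mult` and `min_cost_walk` are hypothetical names.

```python
import math

INF = math.inf  # assumption: a missing edge is encoded as infinite cost


def min_plus_mult(X, Y):
    """Min-plus product: Z[i][j] = min over l of (X[i][l] + Y[l][j])."""
    n = len(X)
    return [[min(X[i][l] + Y[l][j] for l in range(n)) for j in range(n)]
            for i in range(n)]


def min_cost_walk(C, k):
    """Return D where D[i][j] is the minimal cost of a walk with exactly k edges from i to j."""
    n = len(C)
    # Base case k = 0: staying put costs 0, everything else is unreachable.
    D = [[0 if i == j else INF for j in range(n)] for i in range(n)]
    for _ in range(k):
        D = min_plus_mult(D, C)
    return D


# Hypothetical example: edges 0 -> 1 (cost 1), 1 -> 2 (cost 2), 0 -> 2 (cost 10).
C = [[INF, 1, 10],
     [INF, INF, 2],
     [INF, INF, INF]]
D1 = min_cost_walk(C, 1)
D2 = min_cost_walk(C, 2)
```

With one edge the only way from 0 to 2 is the direct edge of cost 10; with two edges the walk through vertex 1 costs 3.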

Number of Walks

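We want to count the number of walks of length $k$ that reach a vertex $j$. We let $N^{(k)}_{ij}$ = number of walks of length $k$ from $i$ to $j$. Recurrence: $N^{(k)}_{ij} = \sum_{l} N^{(k-1)}_{il} \cdot A_{lj}$, where $A_{lj} = 1$ iff there is an edge from $l$ to $j$. This is exactly a matrix multiplication. Note that if $A_{lj} = 0$ the product will be $0$, and as there is no walk that reaches $j$ through $l$ as its last step, this is exactly what we want.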

Let $A$ denote the adjacency matrix for graph $G$. Then the number of walks of length $k$ from $i$ to $j$ is $N^{(k)}_{ij} = (A^k)_{ij}$.
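As a small illustration, the following sketch counts walks by repeated multiplication with a 0/1 adjacency matrix. The helper names `mat_mult` and `count_walks` are assumptions made for this example, and the loop deliberately uses the plain $k$-step version rather than any speed-up.

```python
def mat_mult(X, Y):
    """Ordinary integer matrix product of two n x n matrices."""
    n = len(X)
    return [[sum(X[i][l] * Y[l][j] for l in range(n)) for j in range(n)]
            for i in range(n)]


def count_walks(A, k):
    """Return A^k, whose (i, j) entry is the number of walks with k edges from i to j."""
    n = len(A)
    # A^0 is the identity: exactly one empty walk from each vertex to itself.
    N = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    for _ in range(k):
        N = mat_mult(N, A)
    return N


# Hypothetical example: the undirected triangle on vertices 0, 1, 2.
A = [[0, 1, 1],
     [1, 0, 1],
     [1, 1, 0]]
N2 = count_walks(A, 2)
```

In this graph `N2[0][0]` is 2 (go to either neighbour and come back) and `N2[0][1]` is 1 (the only two-edge walk is 0, 2, 1).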

Counting Triangle Walks

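We can use this trick to count the number of triangle walks in a graph (walks from $u$ to $v$ to $w$ and back to $u$, not using $u$ in between).

As a triangle walk is just a walk from $u$ back to $u$ with $3$ edges (assuming the graph has no self-loops), we can use the matrix $A^3$, where $A$ is the adjacency matrix. We can check the number of triangle walks from vertex $u$ by checking $(A^3)_{uu}$. Then we sum over all of these $u$’s.

This is exactly the trace of $A^3$, thus the number of triangle walks is $\operatorname{tr}(A^3) / 3$. Note we divide by three as otherwise we’d count the same walk thrice, once for each of its three vertices as the starting point. In an undirected graph each triangle is additionally walked in both directions, so the number of distinct triangles there is $\operatorname{tr}(A^3) / 6$.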
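The trace formula can be checked on small graphs with a sketch like the one below. It assumes a 0/1 adjacency matrix without self-loops; `count_triangle_walks` is a hypothetical name.

```python
def mat_mult(X, Y):
    """Ordinary integer matrix product of two n x n matrices."""
    n = len(X)
    return [[sum(X[i][l] * Y[l][j] for l in range(n)) for j in range(n)]
            for i in range(n)]


def count_triangle_walks(A):
    """Return trace(A^3) / 3 for a 0/1 adjacency matrix A without self-loops."""
    A3 = mat_mult(mat_mult(A, A), A)
    trace = sum(A3[u][u] for u in range(len(A)))
    # Each closed 3-edge walk is counted once per starting vertex, hence / 3.
    return trace // 3


# Hypothetical examples.
directed_cycle = [[0, 1, 0],
                  [0, 0, 1],
                  [1, 0, 0]]   # 0 -> 1 -> 2 -> 0
undirected_triangle = [[0, 1, 1],
                       [1, 0, 1],
                       [1, 1, 0]]
```

The directed cycle gives 1. The undirected triangle gives 2, because the same triangle can be walked clockwise and counter-clockwise; dividing by 6 instead would count it once.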

Reachability (Transitive Closure)

In an undirected graph, it’s easy to check for reachability: we just use BFS or DFS to find the connected component.

In a directed graph the problem is slightly more involved. Instead of counting the number of walks, we now go back to the problem of checking if any exists.

But we don’t want to have to calculate every power $A^1, A^2, \dots, A^{n-1}$ in order to check if there exists a path of length $1, 2, \dots, n-1$; we want to check only the final power $A^{n-1}$.

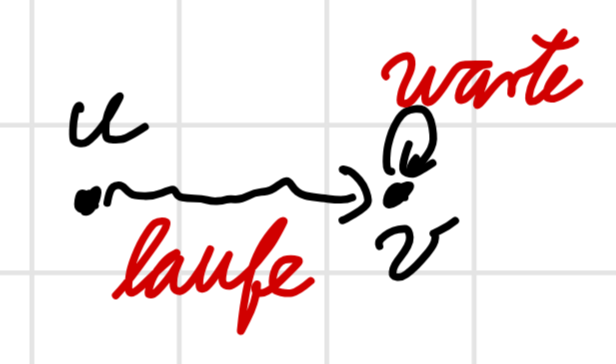

We can do this by adding a self-loop ($A_{vv} = 1$) for each vertex $v$. Then any walk can just “wait” on the last vertex, so every shorter walk also shows up as a walk of the final length.

Then we can use iterative squaring to find the final matrix instead of having to check every single one.
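Putting the two ideas together, a reachability matrix might be computed as in this sketch. It assumes a boolean adjacency matrix and relies on the fact that a simple path has at most $n - 1$ edges; `transitive_closure` and `bool_mat_mult` are hypothetical names.

```python
def bool_mat_mult(X, Y):
    """Boolean matrix product: Z[i][j] = OR over l of (X[i][l] AND Y[l][j])."""
    n = len(X)
    return [[any(X[i][l] and Y[l][j] for l in range(n)) for j in range(n)]
            for i in range(n)]


def transitive_closure(A):
    """Return R where R[i][j] is True iff j is reachable from i (every i reaches itself)."""
    n = len(A)
    # Add a self-loop to every vertex so that walks can "wait".
    R = [[bool(A[i][j]) or i == j for j in range(n)] for i in range(n)]
    covered = 1  # R currently captures all walks with at most `covered` edges
    while covered < n - 1:
        # Squaring doubles the maximal walk length that R captures.
        R = bool_mat_mult(R, R)
        covered *= 2
    return R


# Hypothetical example: the directed path 0 -> 1 -> 2 -> 3.
A = [[0, 1, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1],
     [0, 0, 0, 0]]
R = transitive_closure(A)
```

Only about $\log_2 n$ boolean products are needed. In the example, vertex 3 is reachable from 0 but not the other way around.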

Optimisations

There are a few ways to speed up naive matrix multiplication from $O(n^3)$.

Iterative Squaring

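Iterative Squaring relies on the fact that $A^{2m} = A^m \cdot A^m$, which means we only need to compute $A^m$ once and get $A^{2m}$ using one more multiplication (instead of multiplying by $A$ another $m$ times; for $m = 4$ that is one multiplication instead of 4).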

Algorithm:

- Initialize: `result = I` (identity matrix), `temp = A`, `k' = k`
- Iterate: While `k' > 0`:
  - If `k'` is odd: `result = result * temp` (using appropriate multiplication - arithmetic or boolean), `k' = k' - 1`
  - `temp = temp * temp`
  - `k' = k' / 2`
- Return: `result`
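The steps above can be sketched in Python as follows. The function names and the `mult` parameter are assumptions made for this example: `mult` stands in for the "appropriate multiplication", and the integer identity matrix used here fits the arithmetic case.

```python
def mat_mult(X, Y):
    """Ordinary integer matrix product of two n x n matrices."""
    n = len(X)
    return [[sum(X[i][l] * Y[l][j] for l in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(A, k, mult=mat_mult):
    """Compute A^k with O(log k) calls to `mult` by iterative squaring."""
    n = len(A)
    result = [[1 if i == j else 0 for j in range(n)] for i in range(n)]  # identity
    temp = A
    while k > 0:
        if k % 2 == 1:
            # The lowest bit of k is set: fold the current power into the result.
            result = mult(result, temp)
        temp = mult(temp, temp)  # temp runs through A, A^2, A^4, A^8, ...
        k //= 2                  # integer division also absorbs the "k - 1" step
    return result


# Hypothetical example: powers of this matrix contain Fibonacci numbers.
Q = [[1, 1],
     [1, 0]]
Q10 = mat_pow(Q, 10)
```

For `k = 10` the loop runs four times, whereas multiplying by `Q` one step at a time would need nine products.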

Strassen’s

Strassen’s algorithm is basically Karatsuba’s but for matrices. The runtime is given by: $T(n) = 7T(n/2) + O(n^2)$, where the $O(n^2)$ term is the cost of combining the final results for each entry.

This is slightly faster than $O(n^3)$ at $O(n^{\log_2 7}) \approx O(n^{2.81})$, as we can verify using the Master Theorem.
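For reference, the Master Theorem check can be written out as below, assuming the standard form $T(n) = a\,T(n/b) + f(n)$ and the usual Strassen recurrence with seven subproblems of half the size:

$$
\begin{aligned}
T(n) &= 7\,T(n/2) + O(n^2), \qquad a = 7,\; b = 2,\; f(n) = O(n^2) \\
\log_b a &= \log_2 7 \approx 2.807 \\
f(n) &= O\!\left(n^{\log_2 7 - \varepsilon}\right) \text{ for } \varepsilon \approx 0.8 \quad \text{(case 1: the recursion dominates)} \\
T(n) &= \Theta\!\left(n^{\log_2 7}\right) \approx \Theta\!\left(n^{2.81}\right)
\end{aligned}
$$

The same calculation with eight subproblems, $T(n) = 8\,T(n/2) + O(n^2)$, gives $\Theta(n^{\log_2 8}) = \Theta(n^3)$, so the saving comes entirely from dropping one of the eight recursive products.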