1.1 Vectors and linear combinations

The difference $\mathbf{w} - \mathbf{v}$, obtained by subtracting $\mathbf{v}$ from $\mathbf{w}$, is the vector going from the tip of $\mathbf{v}$ to the tip of $\mathbf{w}$.

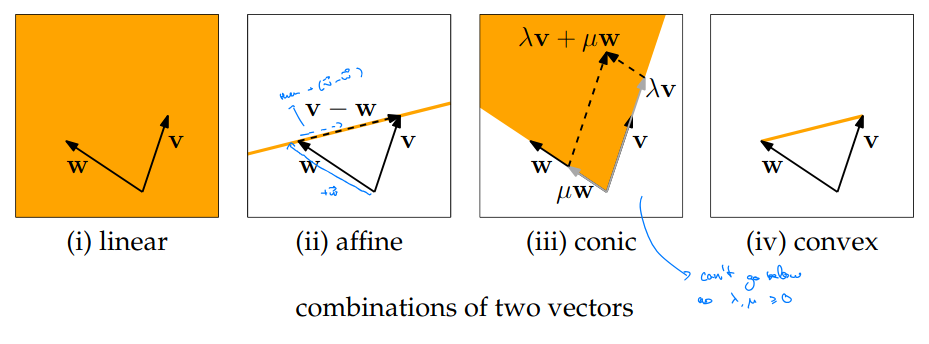

1.1.4 Affine, conic and convex combinations

1.7 Affine, conic, convex combination

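A linear combination $\sum_{j=1}^n \lambda_j \mathbf{v}_j$ of vectors $\mathbf{v}_1, \dots, \mathbf{v}_n$ is called

- an affine combination if $\sum_{j=1}^n \lambda_j = 1$

- a conic combination if $\lambda_j \geq 0$ for $j = 1, 2, \dots, n$

- a convex combination if it is both affine and conic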
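The three definitions only constrain the coefficients $\lambda_1, \dots, \lambda_n$, so they can be checked without looking at the vectors at all. Below is a minimal Python sketch. The helper name `classify_combination` and the floating-point tolerance `tol` are illustrative assumptions and are not part of the notes.

```python
import math

def classify_combination(coeffs, tol=1e-9):
    """Classify the combination sum_j lambda_j v_j using only its coefficients."""
    # Affine: the coefficients sum to 1. The tolerance absorbs float rounding (assumption).
    is_affine = math.isclose(sum(coeffs), 1.0, abs_tol=tol)
    # Conic: every coefficient is nonnegative.
    is_conic = all(c >= 0 for c in coeffs)
    return {
        "affine": is_affine,
        "conic": is_conic,
        "convex": is_affine and is_conic,  # convex = affine and conic
    }

print(classify_combination([0.5, 0.5]))   # midpoint of two vectors: all three kinds
print(classify_combination([2.0, -1.0]))  # affine, but not conic
print(classify_combination([3.0, 4.0]))   # conic, but not affine
```

For example, the convex combinations of two vectors form the line segment between their tips. The affine combinations form the whole line through both tips.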

1.2 Scalar products, lengths and angles

1.2.1 Scalar Product

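The scalar product of $\mathbf{v}, \mathbf{w} \in \mathbb{R}^m$ is defined as:

$$\mathbf{v} \cdot \mathbf{w} = \sum_{i=1}^m v_i w_i = v_1 w_1 + v_2 w_2 + \dots + v_m w_m.$$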
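The scalar product of $\mathbf{v}, \mathbf{w} \in \mathbb{R}^m$ is the sum of the componentwise products $\sum_{i=1}^m v_i w_i$. The short Python sketch below computes it, assuming vectors are represented as plain lists of numbers. The name `dot` is a hypothetical helper chosen for illustration.

```python
def dot(v, w):
    """Scalar product v . w = sum_i v_i * w_i of two vectors of the same dimension."""
    if len(v) != len(w):
        raise ValueError("vectors must have the same dimension")
    return sum(vi * wi for vi, wi in zip(v, w))

print(dot([1, 2, 3], [4, 5, 6]))  # 1*4 + 2*5 + 3*6 = 32
```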

1.9 Scalar Product Properties

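Let $\mathbf{u}, \mathbf{v}, \mathbf{w} \in \mathbb{R}^m$ be vectors and $\lambda \in \mathbb{R}$ a scalar:

- $\mathbf{v} \cdot \mathbf{w} = \mathbf{w} \cdot \mathbf{v}$ (symmetry / commutativity)

- $(\lambda \mathbf{v}) \cdot \mathbf{w} = \lambda (\mathbf{v} \cdot \mathbf{w}) = \mathbf{v} \cdot (\lambda \mathbf{w})$ (scalars move freely)

- $(\mathbf{u} + \mathbf{v}) \cdot \mathbf{w} = \mathbf{u} \cdot \mathbf{w} + \mathbf{v} \cdot \mathbf{w}$ and $\mathbf{u} \cdot (\mathbf{v} + \mathbf{w}) = \mathbf{u} \cdot \mathbf{v} + \mathbf{u} \cdot \mathbf{w}$ (distributivity)

- $\mathbf{v} \cdot \mathbf{v} \geq 0$, with equality exactly if $\mathbf{v} = \mathbf{0}$ (positive definiteness)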

The scalar product measures “how much the vectors point in the same direction”. Geometrically, it projects one vector onto the direction of the other and multiplies the signed length of that projection by the length of the other vector. The more closely the vectors are aligned, the longer this projection is.

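Note that if $\mathbf{v}$ and $\mathbf{w}$ are orthogonal, then $\mathbf{v} \cdot \mathbf{w} = 0$. This holds because $\mathbf{v} \cdot \mathbf{w} = \|\mathbf{v}\| \, \|\mathbf{w}\| \cos(\alpha)$, where $\alpha$ is the angle between the vectors, and $\cos(\alpha) = 0$ if $\alpha = 90^\circ$. Since $\mathbf{0} \cdot \mathbf{w} = 0$ for every $\mathbf{w}$, we can also see that $\mathbf{0}$ is orthogonal to all vectors.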

1.2.2 Euclidean Norm

1.11 Euclidean norm

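The Euclidean norm of $\mathbf{v} \in \mathbb{R}^m$ is the number

$$\|\mathbf{v}\| = \sqrt{\mathbf{v} \cdot \mathbf{v}} = \sqrt{\sum_{i=1}^m v_i^2}.$$

This means that $\|\mathbf{v}\|^2 = \mathbf{v} \cdot \mathbf{v}$.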
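The norm is the square root of the scalar product of a vector with itself, $\|\mathbf{v}\| = \sqrt{\mathbf{v} \cdot \mathbf{v}}$. Below is a minimal sketch using Python's standard `math` module, with plain lists as vectors (an assumption for illustration). The name `norm` is a hypothetical helper.

```python
import math

def norm(v):
    """Euclidean norm ||v|| = sqrt(v . v) = sqrt(sum of squared components)."""
    return math.sqrt(sum(x * x for x in v))

print(norm([3, 4]))  # sqrt(9 + 16) = 5.0
```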

Unit vector

A unit vector is a vector $\mathbf{u}$ such that $\|\mathbf{u}\| = 1$.

We can turn any nonzero vector $\mathbf{v}$ into a unit vector by dividing it by its norm: $\frac{\mathbf{v}}{\|\mathbf{v}\|}$.
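Dividing a nonzero vector $\mathbf{v}$ by its norm gives the unit vector $\mathbf{v} / \|\mathbf{v}\|$, which points in the same direction as $\mathbf{v}$. The zero vector has norm $0$ and therefore cannot be normalized. The Python sketch below treats that case as an error, which is a design choice for this illustration. The name `normalize` is hypothetical.

```python
import math

def normalize(v):
    """Return the unit vector v / ||v||. Raises for the zero vector."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0:
        raise ValueError("the zero vector cannot be normalized")
    return [x / length for x in v]

print(normalize([3, 4]))  # [0.6, 0.8]
```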

1.2.3 Cauchy-Schwarz Inequality

Cauchy-Schwarz Inequality

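For all $\mathbf{v}, \mathbf{w} \in \mathbb{R}^m$,

$$|\mathbf{v} \cdot \mathbf{w}| \leq \|\mathbf{v}\| \, \|\mathbf{w}\|.$$

Equality holds if and only if one of the two vectors is a scalar multiple of the other.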
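Cauchy-Schwarz states that $|\mathbf{v} \cdot \mathbf{w}| \leq \|\mathbf{v}\| \, \|\mathbf{w}\|$, with equality exactly when one vector is a scalar multiple of the other. The Python sketch below spot-checks this on concrete vectors by computing the gap $\|\mathbf{v}\| \, \|\mathbf{w}\| - |\mathbf{v} \cdot \mathbf{w}|$. This is a numerical illustration, not a proof. The helper names and the tolerance are assumptions.

```python
import math

def dot(v, w):
    return sum(a * b for a, b in zip(v, w))

def norm(v):
    return math.sqrt(dot(v, v))

def cs_gap(v, w):
    """||v|| ||w|| - |v . w|: never negative; zero (up to rounding) for parallel vectors."""
    return norm(v) * norm(w) - abs(dot(v, w))

def cauchy_schwarz_holds(v, w, tol=1e-9):
    """Check |v . w| <= ||v|| ||w||, allowing a small floating-point tolerance."""
    return cs_gap(v, w) >= -tol

print(cauchy_schwarz_holds([1, 2, 3], [4, -5, 6]))  # True
print(cs_gap([1, 2], [2, 4]))  # about 0, since [2, 4] = 2 * [1, 2]
print(cs_gap([1, 0], [0, 1]))  # 1.0: orthogonal vectors are far from equality
```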

1.2.4 Angles

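Angle between vectors

For nonzero $\mathbf{v}, \mathbf{w} \in \mathbb{R}^m$, the angle $\alpha \in [0, \pi]$ between them is the unique angle such that

$$\cos(\alpha) = \frac{\mathbf{v} \cdot \mathbf{w}}{\|\mathbf{v}\| \, \|\mathbf{w}\|}.$$

By the Cauchy-Schwarz inequality, the right-hand side lies in $[-1, 1]$, so such an $\alpha$ exists.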
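For nonzero vectors, the angle $\alpha$ satisfies $\cos(\alpha) = \frac{\mathbf{v} \cdot \mathbf{w}}{\|\mathbf{v}\| \, \|\mathbf{w}\|}$. It can therefore be recovered with `math.acos`. The Python sketch below returns it in degrees. It clamps the quotient to $[-1, 1]$, because floating-point rounding can push it slightly outside that range even though Cauchy-Schwarz guarantees it lies inside. The function name is a hypothetical helper.

```python
import math

def angle_degrees(v, w):
    """Angle between nonzero vectors v and w, from cos(alpha) = v.w / (||v|| ||w||)."""
    d = sum(a * b for a, b in zip(v, w))
    nv = math.sqrt(sum(a * a for a in v))
    nw = math.sqrt(sum(b * b for b in w))
    if nv == 0 or nw == 0:
        raise ValueError("the angle is undefined for the zero vector")
    cos_alpha = d / (nv * nw)
    # Clamp: rounding may give e.g. 1.0000000000000002, which acos rejects.
    cos_alpha = max(-1.0, min(1.0, cos_alpha))
    return math.degrees(math.acos(cos_alpha))

print(angle_degrees([1, 0], [0, 1]))   # about 90: orthogonal vectors
print(angle_degrees([1, 0], [1, 1]))   # about 45
print(angle_degrees([1, 0], [-3, 0]))  # about 180: opposite directions
```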

1.15 Orthogonal Vectors

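Two vectors $\mathbf{v}, \mathbf{w} \in \mathbb{R}^m$ are orthogonal if $\mathbf{v} \cdot \mathbf{w} = 0$. For nonzero vectors, this means that the cosine of the angle between them is $0$, so the angle is $90$ degrees.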

As the scalar product of $\mathbf{0}$ with any vector is $0$, all vectors are orthogonal to $\mathbf{0}$.

1.15 Hyperplane through the origin

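Let $\mathbf{d} \in \mathbb{R}^m$ with $\mathbf{d} \neq \mathbf{0}$. The hyperplane through the origin with normal vector $\mathbf{d}$ is the set of all vectors orthogonal to $\mathbf{d}$:

$$H = \{ \mathbf{v} \in \mathbb{R}^m : \mathbf{v} \cdot \mathbf{d} = 0 \}.$$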

Hyperplane dimension

A hyperplane through the origin of $\mathbb{R}^m$ has dimension $m - 1$ (Assignment 6).
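A hyperplane through the origin is the set of vectors orthogonal to a fixed nonzero $\mathbf{d} \in \mathbb{R}^m$. One way to see where the dimension $m - 1$ comes from is to write down $m - 1$ independent vectors in it. Pick an index $k$ with $d_k \neq 0$. Then, for every $i \neq k$, the vector $\mathbf{e}_i - \frac{d_i}{d_k} \mathbf{e}_k$ has scalar product $d_i - \frac{d_i}{d_k} d_k = 0$ with $\mathbf{d}$. This construction is one illustrative choice among many and is not the method from the assignment. The Python sketch below uses exact `fractions.Fraction` arithmetic. The function name is hypothetical.

```python
from fractions import Fraction

def hyperplane_vectors(d):
    """Return m - 1 independent vectors orthogonal to the nonzero vector d in R^m.

    For a fixed k with d[k] != 0, each vector is e_i - (d[i] / d[k]) * e_k, i != k.
    """
    m = len(d)
    k = next((i for i, x in enumerate(d) if x != 0), None)
    if k is None:
        raise ValueError("d must be a nonzero vector")
    vectors = []
    for i in range(m):
        if i == k:
            continue
        v = [Fraction(0)] * m
        v[i] = Fraction(1)  # only this vector has a nonzero entry at position i
        v[k] = -Fraction(d[i]) / Fraction(d[k])
        vectors.append(v)
    return vectors

d = [1, 2, 3]
for v in hyperplane_vectors(d):
    print(v, sum(a * b for a, b in zip(v, d)))  # the scalar product with d is 0
```

The vectors are independent because vector $i$ is the only one with a nonzero entry at position $i$.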

1.2.5 Triangle Inequality

Triangle Inequality

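For all $\mathbf{v}, \mathbf{w} \in \mathbb{R}^m$,

$$\|\mathbf{v} + \mathbf{w}\| \leq \|\mathbf{v}\| + \|\mathbf{w}\|.$$

Equality holds exactly if one of the vectors is a nonnegative scalar multiple of the other.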
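The triangle inequality says that $\|\mathbf{v}\| + \|\mathbf{w}\| - \|\mathbf{v} + \mathbf{w}\|$ is never negative. The gap is zero exactly when one vector is a nonnegative multiple of the other. The Python sketch below computes this gap for a few concrete vectors as a numerical illustration. The helper names are assumptions.

```python
import math

def norm(v):
    return math.sqrt(sum(x * x for x in v))

def triangle_gap(v, w):
    """||v|| + ||w|| - ||v + w||: nonnegative, and zero (up to rounding) iff same direction."""
    s = [a + b for a, b in zip(v, w)]
    return norm(v) + norm(w) - norm(s)

print(triangle_gap([3, 0], [0, 4]))    # 3 + 4 - 5 = 2.0
print(triangle_gap([1, 2], [2, 4]))    # same direction: about 0
print(triangle_gap([1, 2], [-2, -4]))  # opposite directions: strictly positive
```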

1.2.6 Covectors and $\mathbf{v}^\top$

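The scalar product can also be written as $\mathbf{v} \cdot \mathbf{w} = \mathbf{v}^\top \mathbf{w}$. The transpose $\mathbf{v}^\top$ is a covector, written as a row vector instead of a column vector.

Formally, a covector is a linear function $\mathbb{R}^m \to \mathbb{R}$. The covector $\mathbf{v}^\top$ maps $\mathbf{w} \mapsto \mathbf{v}^\top \mathbf{w}$.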
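The view of a covector as a function $\mathbb{R}^m \to \mathbb{R}$ maps directly onto a closure. Given $\mathbf{v}$, return the function $\mathbf{w} \mapsto \mathbf{v}^\top \mathbf{w}$. The Python sketch below is illustrative only. The name `covector` is a hypothetical helper, and vectors are plain lists.

```python
def covector(v):
    """Return v^T as a function: it maps w to v^T w = v . w."""
    def apply(w):
        if len(w) != len(v):
            raise ValueError("dimension mismatch")
        return sum(a * b for a, b in zip(v, w))
    return apply

vT = covector([1, 2, 3])  # the row vector [1 2 3]
print(vT([4, 5, 6]))      # 32, the same as the scalar product [1, 2, 3] . [4, 5, 6]
```

The returned function is linear: applying it to $\mathbf{a} + \mathbf{b}$ gives the sum of its values on $\mathbf{a}$ and $\mathbf{b}$.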

1.3 Linear (in)dependence

Introduction

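If there is a $\lambda \in \mathbb{R}$ such that $\mathbf{w} = \lambda \mathbf{v}$, then $\mathbf{v}$ and $\mathbf{w}$ are collinear. This means that they lie on a common line through the origin.

Two vectors in $\mathbb{R}^3$ cannot span the whole space, but unless they are collinear, they span a plane through the origin.

To check whether three vectors $\mathbf{u}, \mathbf{v}, \mathbf{w}$ are coplanar (with $\mathbf{u}$ and $\mathbf{v}$ not collinear), check whether there exist $\lambda, \mu \in \mathbb{R}$ such that $\mathbf{w} = \lambda \mathbf{u} + \mu \mathbf{v}$.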
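In $\mathbb{R}^3$ there is a closed-form alternative to solving for $\lambda$ and $\mu$. Three vectors lie in a common plane through the origin exactly when the scalar triple product $\mathbf{u} \cdot (\mathbf{v} \times \mathbf{w})$, which equals the $3 \times 3$ determinant, is zero. Note that this swaps in the cross product and the determinant, which this section does not introduce. One advantage is that it also handles the case where $\mathbf{u}$ and $\mathbf{v}$ are collinear. The Python sketch below is exact for integer inputs. For floats, the comparison with zero would need a tolerance. The function name is hypothetical.

```python
def are_coplanar(u, v, w):
    """True if u, v, w in R^3 lie in a common plane through the origin.

    Uses the scalar triple product u . (v x w), which is zero exactly when
    the three vectors are linearly dependent.
    """
    cross = (
        v[1] * w[2] - v[2] * w[1],
        v[2] * w[0] - v[0] * w[2],
        v[0] * w[1] - v[1] * w[0],
    )
    triple = u[0] * cross[0] + u[1] * cross[1] + u[2] * cross[2]
    return triple == 0

print(are_coplanar([1, 0, 0], [0, 1, 0], [2, 3, 0]))  # True: w = 2u + 3v
print(are_coplanar([1, 0, 0], [0, 1, 0], [0, 0, 1]))  # False
print(are_coplanar([1, 0, 0], [2, 0, 0], [0, 0, 1]))  # True: u, v collinear
```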

1.3.1 Definitions and Examples

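Dependence: the vectors $\mathbf{v}_1, \dots, \mathbf{v}_n$ are linearly dependent if one of the following equivalent conditions holds:

- At least one vector in the set is a linear combination of the other ones.

- $\mathbf{0}$ is a non-trivial linear combination of the vectors, i.e., $\sum_{j=1}^n \lambda_j \mathbf{v}_j = \mathbf{0}$ with at least one $\lambda_j \neq 0$.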

- At least one of the vectors is a linear combination of the previous ones.

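Independence: the vectors $\mathbf{v}_1, \dots, \mathbf{v}_n$ are linearly independent if one of the following equivalent conditions holds:

- None of the vectors is a linear combination of the other ones.

- There are no $\lambda_1, \dots, \lambda_n$ besides $\lambda_1 = \lambda_2 = \dots = \lambda_n = 0$ such that $\sum_{j=1}^n \lambda_j \mathbf{v}_j = \mathbf{0}$ (i.e., only the trivial combination gives $\mathbf{0}$).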

- None of the vectors is a linear combination of the previous ones.

IMPORTANT

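Let $\mathbf{v}_1, \dots, \mathbf{v}_n$ be linearly independent. For each vector $\mathbf{v}$ that is a linear combination of them, there is a unique choice of scalars $\lambda_1, \dots, \lambda_n$ such that $\mathbf{v} = \sum_{j=1}^n \lambda_j \mathbf{v}_j$. In other words, a linear combination of linearly independent vectors can only be written in one way.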

Edge Cases:

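- Any sequence of vectors that contains the zero vector $\mathbf{0}$ is linearly dependent: set the coefficient of the zero vector to $1$ and all other $\lambda_j$ to $0$. This gives a non-trivial combination equal to $\mathbf{0}$.

- Any sequence of vectors that contains the same vector twice is linearly dependent, since you can give the two copies opposite coefficients ($1$ and $-1$) and set all other $\lambda_j$ to $0$.

- The empty set is linearly independent, since it contains no vector that could be a linear combination of the others.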
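To test independence mechanically, one option is to count how many of the vectors survive Gaussian elimination as nonzero pivot rows (the rank). The vectors are independent exactly when the rank equals their number. Gaussian elimination is swapped in here as a technique, since this section defines independence only through linear combinations. The Python sketch below uses exact `fractions.Fraction` arithmetic to avoid rounding issues. It assumes all vectors have the same dimension. The names `rank` and `linearly_independent` are hypothetical helpers. The usage lines reproduce the edge cases above.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivot rows after Gaussian elimination (exact arithmetic)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0  # pivot rows found so far
    ncols = len(rows[0]) if rows else 0
    for col in range(ncols):
        # Find a row at or below r with a nonzero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from all rows below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def linearly_independent(vectors):
    """Independent exactly if no vector is eliminated, i.e. rank == number of vectors."""
    return rank(vectors) == len(vectors)

print(linearly_independent([[1, 0], [0, 1]]))                    # True
print(linearly_independent([[1, 0, 0], [0, 1, 0], [1, 1, 0]]))  # False: third = first + second
print(linearly_independent([[1, 2], [0, 0]]))                    # False: contains the zero vector
print(linearly_independent([[1, 2], [1, 2]]))                    # False: same vector twice
print(linearly_independent([]))                                  # True: the empty set
```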

1.3.3 Span of Vectors

1.25 Span

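The span of a set of vectors $\mathbf{v}_1, \dots, \mathbf{v}_n$ is the set of all their linear combinations:

$$\mathbf{Span}(\mathbf{v}_1, \dots, \mathbf{v}_n) = \left\{ \sum_{j=1}^n \lambda_j \mathbf{v}_j : \lambda_1, \dots, \lambda_n \in \mathbb{R} \right\}.$$

Example: in $\mathbb{R}^3$, the span of vectors that are not all zero is one of three possible cases:

- a line through the origin

- a plane through the origin

- the entire space $\mathbb{R}^3$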

Zero always in span

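$\mathbf{0} \in \mathbf{Span}(\mathbf{v}_1, \dots, \mathbf{v}_n)$ for any vectors, since choosing all $\lambda_j = 0$ gives $\mathbf{0}$. This also holds for the empty set ($n = 0$), because the empty sum is $\mathbf{0}$.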

1.26 Linear combination doesn't change the span

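Adding a vector to the set, or removing one from it, does not change the span if that vector is a linear combination of the other vectors:

$$\mathbf{v} \in \mathbf{Span}(\mathbf{v}_1, \dots, \mathbf{v}_n) \implies \mathbf{Span}(\mathbf{v}_1, \dots, \mathbf{v}_n, \mathbf{v}) = \mathbf{Span}(\mathbf{v}_1, \dots, \mathbf{v}_n).$$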
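Appending $\mathbf{v}$ to $\mathbf{v}_1, \dots, \mathbf{v}_n$ can only enlarge the span. The span stays the same exactly when its dimension does not grow. Rank therefore gives a membership test: $\mathbf{v}$ is a linear combination of the $\mathbf{v}_j$ iff appending it does not increase the rank. The Python sketch below uses Gaussian elimination, which is swapped in here and not introduced in this section, with exact fractions. The names `rank` and `in_span` are hypothetical helpers.

```python
from fractions import Fraction

def rank(vectors):
    """Number of pivot rows after Gaussian elimination (exact arithmetic)."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    r = 0
    for col in range(len(rows[0]) if rows else 0):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            f = rows[i][col] / rows[r][col]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def in_span(v, vectors):
    """v is a linear combination of `vectors` iff appending v keeps the rank unchanged."""
    return rank(vectors + [v]) == rank(vectors)

vs = [[1, 0, 1], [0, 1, 1]]
print(in_span([2, 3, 5], vs))  # True: 2*[1, 0, 1] + 3*[0, 1, 1] = [2, 3, 5]
print(in_span([0, 0, 1], vs))  # False: appending it enlarges the span to R^3
print(in_span([0, 0], []))     # True: 0 is in the span of the empty set
```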

1.28 Span the space

The span of $m$ linearly independent vectors in $\mathbb{R}^m$ is $\mathbb{R}^m$.