2.1 Matrices and linear combinations

2.2 Matrix Properties

- The addition of two matrices happens element-wise.

- The scalar multiplication of a matrix is also element-wise.

- The zero matrix is the matrix in which every element is 0.

- If m (rows) = n (columns) the matrix is square.

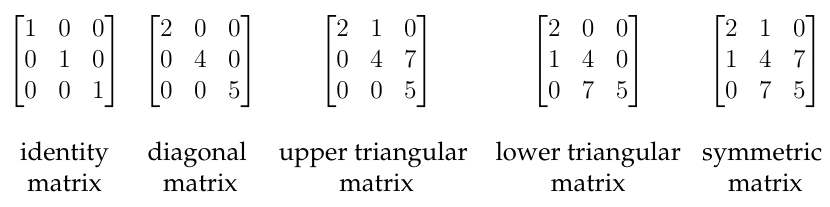

Here are a few special matrix forms:

2.3 Kronecker delta

The Kronecker delta $\delta_{ij}$ is a function taking in two indices $i, j$ and outputting $1$ if $i = j$, else $0$. It’s used to define the identity matrix.

2.3 Identity Matrix

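The identity matrix $I \in \mathbb{R}^{n \times n}$ is defined as $$ I_{ij} = \delta_{ij} = \begin{cases} 1 & \text{if } i = j \\ 0 & \text{else} \end{cases} $$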
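A minimal Python sketch of this definition, assuming matrices are stored as lists of rows and indices start at 0 (the function names `kronecker_delta` and `identity` are chosen for illustration only):

```python
def kronecker_delta(i, j):
    """Return 1 if the two indices agree, else 0."""
    return 1 if i == j else 0


def identity(n):
    """Build the n x n identity matrix entry by entry from the Kronecker delta."""
    return [[kronecker_delta(i, j) for j in range(n)] for i in range(n)]


print(identity(3))  # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
```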

Extra: Nilpotency

Nilpotent

A square matrix $A$ is called nilpotent if there exists a $k \in \mathbb{N}$ such that $A^k = 0$.

Such a matrix does not have an inverse.
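A small standard example (not taken from these notes), with $N$ as an illustrative name:

$$ N = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \qquad N^2 = \begin{pmatrix} 0 & 0 \\ 0 & 0 \end{pmatrix} $$

To see why an inverse cannot exist, assume $N^k = 0$ and that $N^{-1}$ existed. Then

$$ I = (N^{-1})^k N^k = (N^{-1})^k \cdot 0 = 0, $$

which is a contradiction.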

2.1.1 Matrix-Vector multiplication

$Ax$ is the multiplication of the matrix $A \in \mathbb{R}^{m \times n}$ with the vector $x \in \mathbb{R}^n$.

- The result is a vector with $m$ rows: row $i$ is the scalar product of row $i$ of $A$ with $x$.

- The resulting vector is the sum of the columns of $A$, each scaled by the corresponding entry of $x$ → this is the definition of the linear combination. The matrix-vector multiplication is thus basically a linear combination.

2.5 Linear combinations and independence

- A vector $b \in \mathbb{R}^m$ is a linear combination of the columns of $A \in \mathbb{R}^{m \times n}$ if and only if there exists a vector $x \in \mathbb{R}^n$ (of suitable scalars) such that $Ax = b$.

- The columns of $A$ are linearly independent if and only if $x = 0$ is the only vector such that $Ax = 0$.
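A small worked example for both statements, using a matrix chosen only for illustration:

$$ A = \begin{pmatrix} 1 & 2 \\ 2 & 4 \end{pmatrix}, \qquad A \begin{pmatrix} 1 \\ 1 \end{pmatrix} = \begin{pmatrix} 3 \\ 6 \end{pmatrix}, \qquad A \begin{pmatrix} 2 \\ -1 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix} $$

The first product shows that $(3, 6)$ is a linear combination of the columns. The second shows a non-zero vector that is mapped to $0$, so the columns of this $A$ are linearly dependent.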

2.7 Identity Matrix

The identity matrix times a vector gives back that exact vector: $$ Ix = x $$

Note that matrix-vector multiplication can be viewed from two perspectives:

- Column View: When doing $Ax$, $x$ contains the coefficients by which we multiply each of the columns of $A$, giving us a linear combination.

- Row View: Each entry of $Ax$ is the scalar product of a row of $A$ and $x$: $$ (Ax)_i = \sum_{j=1}^{n} a_{ij} x_j $$
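The two views can be sketched in plain Python, assuming a matrix is stored as a list of rows. The function names are illustrative. Both functions compute the same product and differ only in the order in which the terms are added up.

```python
def matvec_row_view(A, x):
    """Row view: entry i is the scalar product of row i of A with x."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def matvec_column_view(A, x):
    """Column view: add up the columns of A, column j scaled by x[j]."""
    m = len(A)
    result = [0] * m
    for j, xj in enumerate(x):
        for i in range(m):
            result[i] += xj * A[i][j]
    return result


A = [[1, 2, 3],
     [4, 5, 6]]
x = [1, 0, -1]
print(matvec_row_view(A, x))     # [-2, -2]
print(matvec_column_view(A, x))  # [-2, -2]
```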

2.1.2 Column space and rank

2.9 Column space

The column space $C(A)$ of a matrix $A \in \mathbb{R}^{m \times n}$ is the span (set of all linear combinations) of the columns (interpreted as vectors): $$ C(A) = \{Ax : x \in \mathbb{R}^n\} $$

Note that in the same way as $0$ is always in the span of any set of vectors, $0$ is always part of the column space.

2.10 Rank

The rank of a matrix $A \in \mathbb{R}^{m \times n}$ is a number between $0$ and $n$ which counts the number of independent columns. A column is independent if it is not a linear combination of the previous ones (or the next ones, if you do it the other way round).

0 Rank

The rank is exactly $0$ if and only if $A$ is the zero matrix.

Note that we can reorder the columns of a matrix and thus get different independent columns, but the rank will stay the same.
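One way to count independent columns by machine is sketched below. It relies on Gaussian elimination, a technique not introduced in this section: the columns in which elimination finds a pivot are exactly the columns that are not a linear combination of the earlier ones. The function names are illustrative, and exact fractions are used to avoid rounding problems.

```python
from fractions import Fraction


def independent_columns(A):
    """Indices of the columns that are not a linear combination of earlier columns."""
    M = [[Fraction(v) for v in row] for row in A]
    m = len(M)
    n = len(M[0]) if m else 0
    pivots = []
    r = 0  # row in which the next pivot will be placed
    for j in range(n):
        # Look for a non-zero entry in column j, at or below row r.
        p = next((i for i in range(r, m) if M[i][j] != 0), None)
        if p is None:
            continue  # column j depends on the earlier pivot columns
        M[r], M[p] = M[p], M[r]
        for i in range(r + 1, m):
            f = M[i][j] / M[r][j]
            M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        pivots.append(j)
        r += 1
        if r == m:
            break
    return pivots


def rank(A):
    return len(independent_columns(A))


A = [[1, 2, 3],
     [2, 4, 5]]
A_reordered = [[2, 1, 3],   # columns 1 and 2 of A swapped
               [4, 2, 5]]
print(independent_columns(A), rank(A))                      # [0, 2] 2
print(independent_columns(A_reordered), rank(A_reordered))  # [0, 2] 2
print(rank([[0, 0], [0, 0]]))                               # 0
```

In the reordered matrix, index 0 now refers to what was the second column of `A`, so a different set of columns is reported as independent while the rank stays 2.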

2.11 Independent Columns span the column space

The independent columns of $A$ span the column space of $A$.

This can be proven by lemma 1.26 (adding elements that are a linear combination of the other ones doesn’t change the span).

2.1.3 Row space and transpose

We can also define the row-space as the space spanned by the rows and the row-rank as the number of independent rows.

Row-rank = Column-Rank

The rank of the rows and the columns is always the same!

2.12 Transpose

The transpose of $A \in \mathbb{R}^{m \times n}$ is the matrix $A^\top \in \mathbb{R}^{n \times m}$ where $(A^\top)_{ij} = a_{ji}$.

2.13 Transposing twice

For all matrices $A$: $$ (A^\top)^\top = A $$

2.13 Symmetric

A square matrix $A$ is symmetric if and only if $A^\top = A$.
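A minimal Python sketch of transposing and of the symmetry check, assuming matrices are lists of rows (the helper names are illustrative):

```python
def transpose(A):
    """Swap rows and columns: entry (i, j) of the result is A[j][i]."""
    return [list(col) for col in zip(*A)]


def is_symmetric(A):
    """A matrix is symmetric if it equals its own transpose."""
    return A == transpose(A)


A = [[1, 2, 3],
     [4, 5, 6]]
S = [[1, 7],
     [7, 2]]
print(transpose(A))                  # [[1, 4], [2, 5], [3, 6]]
print(transpose(transpose(A)) == A)  # True
print(is_symmetric(S))               # True
print(is_symmetric(A))               # False
```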

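2.14 Row space

The row space of $A$ is the span of its rows, which is the same as the column space of the transpose $A^\top$.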

2.1.5 Nullspace

2.17 Nullspace

Let $A$ be an $m \times n$ matrix. The nullspace of $A$ is the set $$ N(A) = \{x \in \mathbb{R}^n : Ax = 0\} $$

Note that the vector $0$ is always in $N(A)$.
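A small worked example, with a matrix chosen only for illustration. The condition $Ax = 0$ reads $x_1 + x_3 = 0$ and $x_2 + x_3 = 0$, so every solution is a multiple of one vector:

$$ A = \begin{pmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \end{pmatrix}, \qquad \{x \in \mathbb{R}^3 : Ax = 0\} = \left\{ t \begin{pmatrix} 1 \\ 1 \\ -1 \end{pmatrix} : t \in \mathbb{R} \right\} $$

Choosing $t = 0$ gives the zero vector, which is always a solution.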

2.2 Matrices and linear transformations

2.18 Matrix Transformation

For $A \in \mathbb{R}^{m \times n}$, $T_A$ is the function $T_A : \mathbb{R}^n \to \mathbb{R}^m$ defined by: $$ T_A(x) = Ax $$

2.19 Linearity Axioms

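Let $A$ be an $m \times n$ matrix, $x, y \in \mathbb{R}^n$ and $\lambda \in \mathbb{R}$. Then $$ T_A(x + y) = T_A(x) + T_A(y) \quad \text{and} \quad T_A(\lambda x) = \lambda T_A(x) $$

Because we can express every vector as a linear combination of the unit vectors $e_1, \dots, e_n$, we can reconstruct what happens to every single vector by understanding what happens to the unit vectors (thanks to linearity).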
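Written out as a short derivation, where $T_A(x) = Ax$ and $a_j$ denotes column $j$ of $A$ (a symbol introduced only for this sketch):

$$ x = \sum_{j=1}^{n} x_j e_j \quad \Longrightarrow \quad T_A(x) = \sum_{j=1}^{n} x_j \, T_A(e_j) = \sum_{j=1}^{n} x_j \, a_j $$

The last step uses $A e_j = a_j$: multiplying by the $j$-th unit vector picks out the $j$-th column.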

2.2.2 Linear Transformations and linear functionals

2.21 Linear Transformation

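A function $T : \mathbb{R}^n \to \mathbb{R}^m$ / $T : \mathbb{R}^n \to \mathbb{R}$ is called a linear transformation / linear functional if the following linearity axiom holds for all $x, y \in \mathbb{R}^n$ and all $\lambda, \mu \in \mathbb{R}$: $$ T(\lambda x + \mu y) = \lambda T(x) + \mu T(y) $$

By this definition, we can deduce that the transformation of a linear combination is the linear combination of the transformed vectors: $$ T\Big(\sum_{j} \lambda_j x_j\Big) = \sum_{j} \lambda_j T(x_j) $$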

Linear is matrix

Every matrix transformation is a linear transformation.

From definition 2.21 follow the two linearity axioms “light”: $$ T(x + y) = T(x) + T(y) \quad \text{and} \quad T(\lambda x) = \lambda T(x) $$

- Use these to prove that something is a linear transformation, as it’s easier to check them in isolation.

2.24 0 maps to 0

For every linear transformation / linear functional $T$ we have: $$ T(0) = 0 $$

2.2.3 The matrix of a linear transformation

2.26 Every linear transformation is uniquely described by a matrix

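Let $T : \mathbb{R}^n \to \mathbb{R}^m$ be a linear transformation. There is a unique $m \times n$ matrix $A$ such that $T = T_A$, meaning that $T(x) = Ax$ for all $x \in \mathbb{R}^n$.

We can find this matrix using the formula $$ A = \begin{pmatrix} T(e_1) & T(e_2) & \cdots & T(e_n) \end{pmatrix} $$ i.e. column $j$ of $A$ is the image $T(e_j)$ of the $j$-th unit vector.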
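The construction can be sketched in Python: apply the transformation to each unit vector and use the results as columns. The helper names and the example map `T` are assumptions made for illustration. The sketch only yields a matrix that represents `T` if `T` really is linear.

```python
def unit_vector(n, j):
    """The j-th unit vector of length n (0-based index)."""
    return [1 if i == j else 0 for i in range(n)]


def matrix_of(T, n):
    """Matrix whose j-th column is T applied to the j-th unit vector."""
    columns = [T(unit_vector(n, j)) for j in range(n)]
    # columns[j] holds T(e_j); turn the list of columns into a list of rows.
    return [list(row) for row in zip(*columns)]


def T(v):
    """An example linear transformation from R^2 to R^3."""
    x, y = v
    return [x + 2 * y, 3 * y, -x]


A = matrix_of(T, 2)
print(A)  # [[1, 2], [0, 3], [-1, 0]]
```

Multiplying `A` with any vector `[x, y]` then reproduces `T([x, y])`.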

Note that for two matrices and , .

2.2.4 Kernel and Image

The kernel is the nullspace of a linear transformation. The image is the column space of the linear transformation.

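Note that for a linear transformation $T$ and the unique matrix $A$ representing it, we have $\mathrm{Ker}(T) = N(A)$ (the nullspace of $A$) and $\mathrm{Im}(T) = C(A)$ (the column space of $A$).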

2.3 Matrix Multiplication

2.40 Transpose of multiplication

$(AB)^\top = B^\top A^\top$ for two matrices $A, B$ with corresponding dimensions.
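A quick numerical check of this rule on one example, as a plain-Python sketch (matrices as lists of rows, illustrative helper names). A single example does not prove the rule, but it shows how the order of the factors flips.

```python
def matmul(A, B):
    """Matrix product: entry (i, j) is the scalar product of row i of A and column j of B."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


A = [[1, 2, 3],
     [4, 5, 6]]   # 2 x 3
B = [[1, 0],
     [2, 1],
     [0, 3]]      # 3 x 2

left = transpose(matmul(A, B))              # (AB)^T
right = matmul(transpose(B), transpose(A))  # B^T A^T
print(left)           # [[5, 14], [11, 23]]
print(left == right)  # True
```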

Note that and for all matrices .

2.42 Matrix Multiplication Properties

- $A(B + C) = AB + AC$ and $(A + B)C = AC + BC$ (distributivity)

- $(AB)C = A(BC)$ (associativity)

Note that matrix multiplication is not commutative.
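A small counterexample to commutativity, with matrices chosen only for illustration:

$$ A = \begin{pmatrix} 0 & 1 \\ 0 & 0 \end{pmatrix}, \quad B = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}, \qquad AB = \begin{pmatrix} 1 & 0 \\ 0 & 0 \end{pmatrix} \neq \begin{pmatrix} 0 & 0 \\ 0 & 1 \end{pmatrix} = BA $$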

2.3.4 Everything is Matrix-Matrix

You can see all types of multiplication (vector-matrix, matrix-vector, vector-vector, scalar product) as a matrix-matrix multiplication.

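We can use associativity to our advantage: if a product has very big intermediate matrices, we can re-bracket it so that the intermediate results stay small. For a product of three matrices this means that $(AB)C = A(BC)$, so we are free to compute whichever side is cheaper.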
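One illustrative case, chosen here as an assumption and not necessarily the example the notes had in mind, is the product $v w^\top x$ of three vectors of length $n$. Bracketed as $(v w^\top) x$ it builds an $n \times n$ intermediate matrix. Bracketed as $v (w^\top x)$ the intermediate result is a single number. A plain-Python sketch:

```python
def outer(v, w):
    """The n x n matrix v w^T."""
    return [[vi * wj for wj in w] for vi in v]


def dot(a, b):
    """The scalar product a^T b."""
    return sum(ai * bi for ai, bi in zip(a, b))


def matvec(A, x):
    return [dot(row, x) for row in A]


v = [1, 2, 3]
w = [4, 5, 6]
x = [1, 0, -1]

slow = matvec(outer(v, w), x)  # (v w^T) x: about 2 * n^2 multiplications
s = dot(w, x)                  # w^T x is a single number
fast = [vi * s for vi in v]    # v (w^T x): about 2 * n multiplications
print(slow)  # [-2, -4, -6]
print(fast)  # [-2, -4, -6]
```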

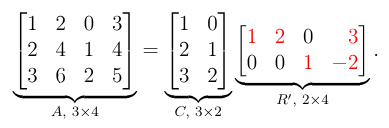

2.3.5 CR Decomposition

CR decomposition

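A matrix $A \in \mathbb{R}^{m \times n}$ of rank $r$ can be written as the matrix-matrix product $A = CR'$, where $C \in \mathbb{R}^{m \times r}$ is the submatrix containing the independent columns and $R' \in \mathbb{R}^{r \times n}$ is the unique matrix for which this holds.

You can see why this works intuitively. The result of the multiplication is a matrix in $\mathbb{R}^{m \times n}$. Each column of $R'$ holds the coefficients with which we combine the independent columns to get the corresponding column of $A$.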

You can see that in the columns belonging to the independent vectors, $R'$ is such that the linear combination only contains the vector itself, once. The dependent columns are a combination of the independent ones (see column 2 being 2 times column 1, so $R'$ has $(2, 0)$ as its second column).
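A worked example consistent with this description, with a matrix chosen here for illustration (columns 1 and 3 are independent, column 2 is 2 times column 1):

$$ \underbrace{\begin{pmatrix} 1 & 2 & 3 \\ 2 & 4 & 5 \end{pmatrix}}_{A} = \underbrace{\begin{pmatrix} 1 & 3 \\ 2 & 5 \end{pmatrix}}_{C} \underbrace{\begin{pmatrix} 1 & 2 & 0 \\ 0 & 0 & 1 \end{pmatrix}}_{R'} $$

Columns 1 and 3 of $R'$ are unit vectors, since each independent column is taken exactly once. Column 2 of $R'$ is $(2, 0)$: two times the first independent column, zero times the second.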

Note that the $C$ in the $CR'$ decomposition does not need to be a column vector: its width equals the number of independent columns of $A$, i.e. the rank $r$.

Note that by Lemma 1.24 $R'$ is unique, as there is only one way to write a vector as a linear combination of independent ones.

We can also use the CR decomposition to compress a matrix, as the decomposition uses only $r(m + n)$ entries. This is fewer than the $mn$ entries of $A$ when the rank $r$ is small.
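The storage argument can be sketched in Python with a rank-1 example. The concrete matrices are assumptions chosen for illustration: a $4 \times 5$ matrix is rebuilt from a $4 \times 1$ factor and a $1 \times 5$ factor.

```python
def matmul(A, B):
    """Matrix product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


C = [[1], [2], [3], [4]]   # 4 x 1: the single independent column
R = [[1, 2, 0, -1, 3]]     # 1 x 5: one coefficient per column of A
A = matmul(C, R)           # the full 4 x 5 matrix

entries_full = len(A) * len(A[0])                     # m * n
entries_cr = len(C) * len(C[0]) + len(R) * len(R[0])  # m * r + r * n
print(A[1])                      # [2, 4, 0, -2, 6]
print(entries_full, entries_cr)  # 20 9
```

For matrices whose rank is close to $\min(m, n)$, the two factors together can need more entries than the matrix itself, so the saving depends on the rank being small.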

2.4 Invertible and Inverse Matrices

By applying the inverse matrix, you can undo a matrix transformation, as long as the inverse exists.

If the matrix $A$ in $T_A$ is not square, the transformation is not bijective and thus not reversible.

Wide Matrix transformations are not injective

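Let $T_A : \mathbb{R}^n \to \mathbb{R}^m$ be a matrix transformation and $m < n$. Then $T_A$ is not injective. This holds because a transformation from more dimensions into fewer loses information: the $n > m$ columns of $A$ are linearly dependent, so some $x \neq 0$ satisfies $Ax = 0 = A0$, and two inputs map to the same output (in the spirit of the pigeonhole principle).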

Tall Matrix transformations are not surjective

Let $T_A : \mathbb{R}^n \to \mathbb{R}^m$ be a matrix transformation and $m > n$. Then $T_A$ is not surjective. This holds because a transformation from fewer dimensions into more doesn’t reach all outputs, so you can’t reverse all of them.
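Two minimal examples, with matrices chosen only for illustration. A wide matrix that sends two different inputs to the same output:

$$ A = \begin{pmatrix} 1 & 1 \end{pmatrix}, \qquad A \begin{pmatrix} 1 \\ -1 \end{pmatrix} = 0 = A \begin{pmatrix} 0 \\ 0 \end{pmatrix} $$

A tall matrix whose outputs never reach some vectors:

$$ B = \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \qquad Bx = \begin{pmatrix} x \\ 0 \end{pmatrix} \neq \begin{pmatrix} 0 \\ 1 \end{pmatrix} \text{ for every } x \in \mathbb{R} $$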

Now let’s look at invertible, square-matrix linear transformations.

The inverse of a bijective linear transformation is also a linear transformation

Let $T : \mathbb{R}^n \to \mathbb{R}^n$ be a bijective linear transformation. Then its inverse $T^{-1}$ is also a linear transformation.

There are three equivalent statements about the matrix transformation $T_A$ of a square matrix $A \in \mathbb{R}^{n \times n}$:

2.53 Equivalences

- (i) $T_A : \mathbb{R}^n \to \mathbb{R}^n$ is bijective.

- (ii) There is an $n \times n$ matrix $B$ such that $BA = I$.

- (iii) The columns of $A$ are linearly independent.

The third one can be derived from the fact that if $T_A$ is injective, there is only a single $x$ such that $Ax = 0$, namely $x = 0$. It is also intuitively clear that if not all columns were linearly independent, the outputs would only fill a lower-dimensional part of the space, so we would be losing information just like with a wide matrix.

Invertible and singular matrix

Let $A \in \mathbb{R}^{n \times n}$. $A$ is invertible if there is a matrix $B$ such that $AB = BA = I$ (such a $B$ only exists if $A$ has linearly independent columns). Otherwise, $A$ is called singular.

$A$ is invertible iff the equivalent conditions (i) to (iii) from 2.53 are true.

2.57 Inverse Matrix

The inverse matrix is unique and can be denoted $A^{-1}$.

2.58 Inverse of the Inverse

Let $A$ be an invertible matrix. Then $A^{-1}$ is also invertible and $$ (A^{-1})^{-1} = A $$

2.59 Inverse of Multiplication

Let $A, B$ be invertible $n \times n$ matrices. Then $AB$ is also invertible and $$ (AB)^{-1} = B^{-1} A^{-1} $$
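A numerical check of the reversed order on one $2 \times 2$ example, as a Python sketch. The helper `inverse_2x2` uses the standard closed-form inverse of a $2 \times 2$ matrix, a formula that does not appear in these notes. All names and the example matrices are chosen for illustration.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inverse_2x2(M):
    """Closed-form inverse of a 2 x 2 matrix, using exact fractions."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


A = [[1, 2], [3, 5]]
B = [[2, 1], [1, 1]]

inv_of_product = inverse_2x2(matmul(A, B))                # (AB)^{-1}
reversed_order = matmul(inverse_2x2(B), inverse_2x2(A))   # B^{-1} A^{-1}
same_order = matmul(inverse_2x2(A), inverse_2x2(B))       # A^{-1} B^{-1}

print(inv_of_product == reversed_order)  # True
print(inv_of_product == same_order)      # False
```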

2.6 Inverse of transpose

Let $A$ be an invertible $n \times n$ matrix. Then the transpose $A^\top$ is also invertible and: $$ (A^\top)^{-1} = (A^{-1})^\top $$
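A short justification, sketched with the transpose rule for products from 2.40, $(AB)^\top = B^\top A^\top$:

$$ A^\top (A^{-1})^\top = (A^{-1} A)^\top = I^\top = I, \qquad (A^{-1})^\top A^\top = (A A^{-1})^\top = I^\top = I $$

So $(A^{-1})^\top$ acts as an inverse of $A^\top$ from both sides.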