6.1 Least Squares Approximation

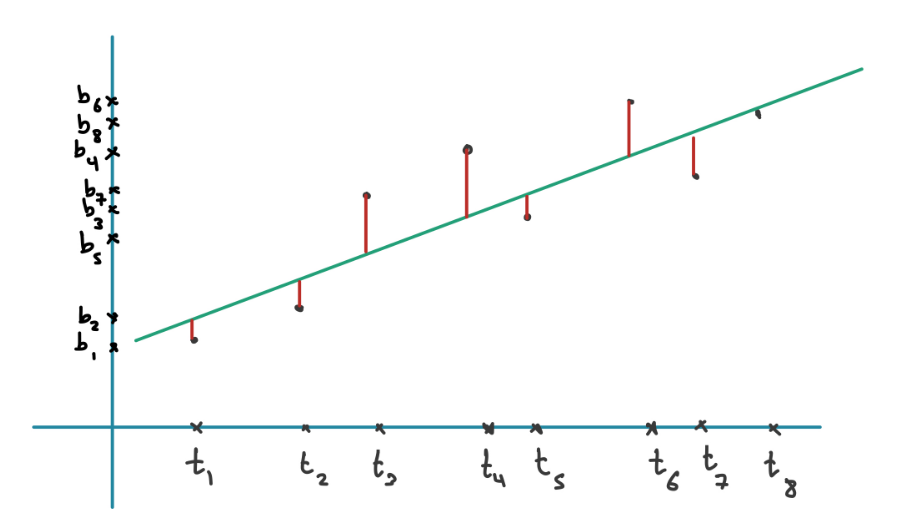

One case in which $Ax = b$ usually has no solution is fitting a line to a set of measurements or data points. We can then use a least squares approximation to find a best-fitting line.

6.1.1 Minimiser is solution to the normal equations

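A minimiser of $\|Ax - b\|^2$ is also a solution of the normal equations $A^\top A x = A^\top b$. When $A$ has linearly independent columns, the unique minimiser of $\|Ax - b\|^2$ is given by

$$\hat{x} = (A^\top A)^{-1} A^\top b.$$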

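The line can be represented by the equation $f(t) = \alpha + \beta t$. Given data points $(t_1, b_1), \dots, (t_m, b_m)$, we want to find $\alpha$ and $\beta$ that minimise the sum of squared errors of the fitted line:

$$\sum_{i=1}^{m} \big(\alpha + \beta t_i - b_i\big)^2,$$

which in matrix-vector notation gives $\|Ax - b\|^2$, where

$$A = \begin{pmatrix} 1 & t_1 \\ 1 & t_2 \\ \vdots & \vdots \\ 1 & t_m \end{pmatrix}, \quad x = \begin{pmatrix} \alpha \\ \beta \end{pmatrix} \quad \text{and} \quad b = \begin{pmatrix} b_1 \\ \vdots \\ b_m \end{pmatrix}.$$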
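Because $A^\top A$ is only $2 \times 2$ for a line fit, the normal equations can be solved in closed form. The following is a minimal Python sketch, assuming integer or `Fraction` data so that the arithmetic is exact; the function name `fit_line` and the parameter names `ts`, `bs` (for the $t_i$ and $b_i$) are hypothetical. It builds $A^\top A$ and $A^\top b$ for $A$ with rows $(1, t_i)$ and solves for the intercept $\alpha$ and slope $\beta$ of $f(t) = \alpha + \beta t$ with Cramer's rule.

```python
from fractions import Fraction


def fit_line(ts, bs):
    """Least squares line f(t) = alpha + beta * t via A^T A x = A^T b.

    A has rows (1, t_i) and x = (alpha, beta). Assumes integer or Fraction
    data, and that not all t_i are equal (so A^T A is invertible).
    """
    m = len(ts)
    # Entries of A^T A = [[m, s_t], [s_t, s_tt]] and A^T b = [s_b, s_tb].
    s_t = sum(ts)
    s_tt = sum(t * t for t in ts)
    s_b = sum(bs)
    s_tb = sum(t * b for t, b in zip(ts, bs))
    det = m * s_tt - s_t * s_t
    if det == 0:
        raise ValueError("all t_i are equal: the columns of A are dependent")
    # Cramer's rule for the 2x2 system.
    alpha = Fraction(s_b * s_tt - s_t * s_tb, det)
    beta = Fraction(m * s_tb - s_t * s_b, det)
    return alpha, beta


alpha, beta = fit_line([0, 1, 2], [6, 0, 0])  # alpha = 5, beta = -3
```

For the toy data $(0, 6), (1, 0), (2, 0)$ this gives the line $5 - 3t$. Cramer's rule is convenient for a $2 \times 2$ system; for more unknowns, elimination is the usual choice.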

6.1.2 Independent columns of $A$

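The columns of the matrix $A$ defined above are linearly dependent if and only if $t_i = t_1$ for all $i$, i.e. all data points were measured at the same time.

As the data points are taken at distinct times (we cannot have two points at one time), this never happens, so with at least two data points the columns of $A$ are always independent.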
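A worked equation makes the lemma concrete. For the matrix $A$ with rows $(1, t_i)$, $i = 1, \dots, m$, and with $\bar t = \frac{1}{m}\sum_{i=1}^m t_i$ (notation introduced here), we get

$$A^\top A = \begin{pmatrix} m & \sum_{i} t_i \\ \sum_{i} t_i & \sum_{i} t_i^2 \end{pmatrix}, \qquad \det(A^\top A) = m \sum_{i=1}^m t_i^2 - \Big(\sum_{i=1}^m t_i\Big)^2 = m \sum_{i=1}^m (t_i - \bar t)^2.$$

The right-hand side is zero exactly when every $t_i$ equals $\bar t$, i.e. when all $t_i$ are equal, which matches the lemma: $A^\top A$ is invertible precisely when the columns of $A$ are independent.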

6.1.3 Orthogonal columns

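If the columns of $A$ are pairwise orthogonal, $A^\top A$ is a diagonal matrix, which is very easy to invert. For the line fit, the first column of $A$ is all ones, so its inner product with the second column is $\sum_{i=1}^m t_i$. We can therefore make the columns of any such $A$ orthogonal by ensuring that $\sum_{i=1}^m t_i = 0$, which can be achieved by shifting the data along the $t$-axis: replace each $t_i$ by $t_i - \bar{t}$, where $\bar{t} = \frac{1}{m}\sum_{i=1}^m t_i$.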
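A minimal Python sketch of the centering trick, assuming exact rational data; `fit_line_centered` is a hypothetical name. After the shift, $A^\top A = \operatorname{diag}\big(m, \sum_i (t_i - \bar t)^2\big)$, so each coefficient needs only one division, and the fitted line reads $\alpha + \beta (t - \bar t)$ in the original variable.

```python
from fractions import Fraction


def fit_line_centered(ts, bs):
    """Least squares line written as f(t) = alpha + beta * (t - t_bar).

    Shifting by the mean t_bar makes sum(t_i - t_bar) = 0, so the columns
    (1, ..., 1) and (t_1 - t_bar, ..., t_m - t_bar) of A are orthogonal and
    A^T A = diag(m, sum((t_i - t_bar)^2)). Assumes not all t_i are equal.
    """
    m = len(ts)
    t_bar = Fraction(sum(ts), m)
    s = [t - t_bar for t in ts]
    # A diagonal A^T A turns the normal equations into two separate divisions.
    alpha = Fraction(sum(bs), m)
    beta = sum(si * b for si, b in zip(s, bs)) / sum(si * si for si in s)
    return t_bar, alpha, beta


t_bar, alpha, beta = fit_line_centered([0, 1, 2], [6, 0, 0])
```

On the toy data $(0, 6), (1, 0), (2, 0)$ it returns $\bar t = 1$, $\alpha = 2$, $\beta = -3$, i.e. $2 - 3(t - 1) = 5 - 3t$, the same line as the uncentered fit.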

We can also fit other curves, not just a line. A parabola $f(t) = \alpha + \beta t + \gamma t^2$ can be used by adding a third column to $A$ containing the values $t_i^2$ and adding a third unknown $\gamma$ to $x$.
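The same recipe extends to any polynomial of degree $d$: row $i$ of $A$ becomes $(1, t_i, t_i^2, \dots, t_i^d)$. The Python sketch below, with the hypothetical name `fit_polynomial`, builds the normal equations $A^\top A x = A^\top b$ and solves them by Gauss-Jordan elimination over `fractions.Fraction`; it assumes at least $d + 1$ distinct $t_i$, so that $A$ has independent columns.

```python
from fractions import Fraction


def fit_polynomial(ts, bs, degree):
    """Least squares fit of f(t) = c_0 + c_1 t + ... + c_d t^d via A^T A x = A^T b.

    Row i of A is (1, t_i, ..., t_i^d); degree=2 gives the parabola.
    Assumes A has independent columns (at least d + 1 distinct t_i).
    """
    n = degree + 1
    A = [[Fraction(t) ** k for k in range(n)] for t in ts]
    # Augmented normal equations [A^T A | A^T b].
    M = [[sum(row[i] * row[j] for row in A) for j in range(n)]
         + [sum(row[i] * b for row, b in zip(A, bs))] for i in range(n)]
    # Gauss-Jordan elimination; A^T A is invertible, so a nonzero pivot exists.
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col] / M[col][col]
                M[r] = [x - factor * y for x, y in zip(M[r], M[col])]
    # M is now diagonal in its first n columns.
    return [M[i][n] / M[i][i] for i in range(n)]


coeffs = fit_polynomial([0, 1, 2, 3], [1, 2, 5, 10], degree=2)
```

The toy points above lie exactly on $1 + t^2$, so the error is zero and the fit recovers the coefficients $[1, 0, 1]$.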

6.2 The set of all solutions to a system of linear equations

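Let $A \in \mathbb{R}^{m \times n}$. We know that for every $x \in \mathbb{R}^n$ there exist $x_r \in C(A^\top)$ and $x_n \in N(A)$ such that $x = x_r + x_n$, as the row space $C(A^\top)$ and the nullspace $N(A)$ are orthogonal complements in $\mathbb{R}^n$.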

6.2.1 Unique solutions

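Let $x \in \mathbb{R}^n$. Let $x = x_r + x_n$ with $x_r \in C(A^\top)$ and $x_n \in N(A)$. We have that $Ax = Ax_r$.

Proof: This is because every vector has a unique decomposition into the two fundamental subspaces. $Ax = Ax_r + Ax_n$, and since $Ax_n = 0$ it follows that $Ax = Ax_r$.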
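To see the decomposition concretely, assume $A$ has linearly independent rows (an assumption made here so that $AA^\top$ is invertible). Then the projection of $x$ onto the row space is $x_r = A^\top (AA^\top)^{-1} A x$, and $x_n = x - x_r$. The Python sketch below (the helper names `solve`, `matvec` and `decompose` are hypothetical) computes both parts exactly.

```python
from fractions import Fraction


def solve(M, v):
    """Solve M y = v exactly for a square invertible M (Gauss-Jordan elimination)."""
    n = len(M)
    aug = [[Fraction(a) for a in row] + [Fraction(vi)] for row, vi in zip(M, v)]
    for col in range(n):
        # Swap a row with a nonzero entry into the pivot position.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col] / aug[col][col]
                aug[r] = [a - f * c for a, c in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def matvec(A, x):
    """Matrix-vector product A x, with A given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def decompose(A, x):
    """Split x into x_r in C(A^T) and x_n in N(A), assuming A has independent rows."""
    AAt = [[sum(p * q for p, q in zip(r1, r2)) for r2 in A] for r1 in A]
    y = solve(AAt, matvec(A, x))          # (A A^T) y = A x
    At = [list(col) for col in zip(*A)]   # transpose of A
    x_r = matvec(At, y)                   # x_r = A^T y lies in the row space
    x_n = [xi - ri for xi, ri in zip(x, x_r)]
    return x_r, x_n


A = [[1, 1, 0]]
x_r, x_n = decompose(A, [2, 0, 5])
```

For $A = (1\ 1\ 0)$ and $x = (2, 0, 5)^\top$ this gives $x_r = (1, 1, 0)^\top$ and $x_n = (1, -1, 5)^\top$; indeed $Ax_n = 0$, $x_r^\top x_n = 0$ and $Ax = Ax_r = 2$.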

6.2.2 Unique solution to a system

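Suppose that $\{x \in \mathbb{R}^n : Ax = b\} \neq \emptyset$. Then

$$\{x \in \mathbb{R}^n : Ax = b\} = x_r + N(A) = \{x_r + x_n : x_n \in N(A)\},$$

where $x_r \in C(A^\top)$ is unique such that $Ax_r = b$.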

This means that if there is more than one solution to the system (i.e. the nullspace is not $\{0\}$), then the set of all solutions is a specific solution plus the entire nullspace.

6.2.3 Unique $x_r$ in $C(A^\top)$

Suppose that $b \in C(A)$. Then there exists a unique vector $x_r \in C(A^\top)$ such that $Ax_r = b$.
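Under the assumption that $A$ has linearly independent rows (so that $AA^\top$ is invertible and every $b$ lies in $C(A)$), the unique row-space solution is $x_r = A^\top y$ with $(AA^\top) y = b$: it lies in $C(A^\top)$ by construction and satisfies $Ax_r = AA^\top y = b$. The Python sketch below (`solve`, `matvec` and `row_space_solution` are hypothetical names) also checks that adding a nullspace vector gives another solution, as in 6.2.2.

```python
from fractions import Fraction


def solve(M, v):
    """Solve M y = v exactly for a square invertible M (Gauss-Jordan elimination)."""
    n = len(M)
    aug = [[Fraction(a) for a in row] + [Fraction(vi)] for row, vi in zip(M, v)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col] / aug[col][col]
                aug[r] = [a - f * c for a, c in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]


def matvec(A, x):
    """Matrix-vector product A x, with A given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def row_space_solution(A, b):
    """Return the unique x_r in C(A^T) with A x_r = b, assuming A has independent rows."""
    AAt = [[sum(p * q for p, q in zip(r1, r2)) for r2 in A] for r1 in A]
    y = solve(AAt, b)                      # (A A^T) y = b
    At = [list(col) for col in zip(*A)]    # transpose of A
    return matvec(At, y)                   # x_r = A^T y


A = [[1, 1, 0],
     [0, 1, 1]]
b = [2, 3]
x_r = row_space_solution(A, b)             # (1/3, 5/3, 4/3)
x_n = [1, -1, 1]                           # A x_n = 0, so x_n is in N(A)
other = [p + q for p, q in zip(x_r, x_n)]  # another solution x_r + x_n
```

Here $x_r$ is orthogonal to the nullspace direction $(1, -1, 1)^\top$, and every solution of $Ax = b$ has the form $x_r + c\,(1, -1, 1)^\top$.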

The empty solution set

We now only need to analyse the case where the given system of linear equations has no solutions: $\{x \in \mathbb{R}^n : Ax = b\} = \emptyset$.

This is difficult, as proving a negative here means either exhausting the entire (infinite) search space or finding a smarter argument.

We can issue a “certificate” which proves that a system has no solution.

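6.2.4 Proving no solutions

Let $A \in \mathbb{R}^{m \times n}$ and $b \in \mathbb{R}^m$, and define

$$P = \{x \in \mathbb{R}^n : Ax = b\}, \qquad D = \{z \in \mathbb{R}^m : A^\top z = 0,\ b^\top z = 1\}.$$

Then $P = \emptyset$ if and only if $D \neq \emptyset$. Any $z \in D$ is a certificate that the system has no solution.

Proof:

- Verify that $P \neq \emptyset$ and $D \neq \emptyset$ cannot both hold:

- If $x \in P$ and $z \in D$, then $0 = (A^\top z)^\top x = z^\top A x = z^\top b = 1$, a contradiction.

- We now want to show that if $P = \emptyset$, then $D \neq \emptyset$:

- $P = \emptyset$ (there are no solutions) $\Rightarrow$ $b \notin C(A)$ $\Rightarrow$ the projection $p$ of $b$ onto $C(A)$ differs from $b$ (there is an error, $e = b - p \neq 0$).

- The error is orthogonal to $C(A)$ and $C(A)^\perp = N(A^\top)$, thus $A^\top e = 0$ and $e \neq 0$.

- We can rewrite $b = p + e$, and thus $b^\top e = p^\top e + e^\top e$.

- We know the first term is $0$, as $p \in C(A)$ and $e \in N(A^\top)$ lie in orthogonal subspaces.

- The second term is $\|e\|^2 > 0$, as $e \neq 0$.

- Thus $z = e / \|e\|^2$ satisfies $A^\top z = 0$ and $b^\top z = 1$, so $z \in D$ and $D \neq \emptyset$.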
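The constructive half of the proof translates directly into code. The Python sketch below (the names `dot` and `certificate` are hypothetical) assumes $A$ has linearly independent columns, so that the projection $p$ of $b$ onto $C(A)$ can be found from the normal equations; it then returns $z = e / \|e\|^2$ with $e = b - p$, which satisfies $A^\top z = 0$ and $b^\top z = 1$, or `None` when $e = 0$ and the system is solvable. Exact `Fraction` arithmetic keeps the test $e = 0$ reliable.

```python
from fractions import Fraction


def dot(u, v):
    """Inner product of two vectors."""
    return sum(p * q for p, q in zip(u, v))


def certificate(A, b):
    """Return z with A^T z = 0 and b^T z = 1 if A x = b has no solution, else None.

    Follows the proof: p is the projection of b onto C(A), e = b - p, z = e / ||e||^2.
    Assumes A (a list of rows) has independent columns, so A^T A is invertible.
    """
    m, n = len(A), len(A[0])
    cols = [[Fraction(A[i][j]) for i in range(m)] for j in range(n)]
    # Augmented normal equations [A^T A | A^T b], solved by Gauss-Jordan elimination.
    M = [[dot(ci, cj) for cj in cols] + [dot(ci, b)] for ci in cols]
    for c in range(n):
        piv = next(r for r in range(c, n) if M[r][c] != 0)
        M[c], M[piv] = M[piv], M[c]
        for r in range(n):
            if r != c and M[r][c] != 0:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * d for a, d in zip(M[r], M[c])]
    x_hat = [M[i][n] / M[i][i] for i in range(n)]
    p = [dot(A[i], x_hat) for i in range(m)]  # p = A x_hat
    e = [bi - pi for bi, pi in zip(b, p)]     # error, orthogonal to C(A)
    ee = dot(e, e)
    if ee == 0:
        return None  # e = 0 means b lies in C(A), so A x = b is solvable
    return [ei / ee for ei in e]


z = certificate([[1], [1]], [1, 0])
```

For $A = (1, 1)^\top$ and $b = (1, 0)^\top$ the error is $e = (\frac{1}{2}, -\frac{1}{2})^\top$ and the certificate is $z = (1, -1)^\top$. For matrices with dependent columns the projection would have to be computed from a basis of $C(A)$ instead.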

Example:

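- The system $Ax = b$ with
  $$A = \begin{pmatrix} 1 & 2 & -1 \\ 2 & 4 & -2 \end{pmatrix}, \qquad b = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$$
  has no solution, since the left-hand side of the second equation is twice that of the first, but the right-hand side is not. Then
  $$D = \{ z \in \mathbb{R}^2 \ | \ z_1 + 2z_2 = 0,\ 2z_1 + 4z_2 = 0,\ -z_1 - 2z_2 = 0,\ z_1 = 1 \},$$
  and $P = \emptyset$ because $D \neq \emptyset$: $z = (1, -\frac{1}{2})^\top \in D$.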
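Checking a certificate only needs two matrix-vector products. Reading the matrix off the equations defining $D$ (the $j$-th equation is column $j$ of $A$ dotted with $z$, and the last one is $b^\top z = 1$) gives $A$ with rows $(1, 2, -1)$ and $(2, 4, -2)$ and $b = (1, 0)^\top$; this reconstruction and the function name `is_certificate` are assumptions for illustration.

```python
from fractions import Fraction


def is_certificate(A, b, z):
    """Check z in D = {z : A^T z = 0, b^T z = 1}; if so, A x = b has no solution."""
    m, n = len(A), len(A[0])
    At_z = [sum(A[i][j] * z[i] for i in range(m)) for j in range(n)]  # A^T z
    b_z = sum(bi * zi for bi, zi in zip(b, z))                         # b^T z
    return all(v == 0 for v in At_z) and b_z == 1


A = [[1, 2, -1],
     [2, 4, -2]]
b = [1, 0]
z = [Fraction(1), Fraction(-1, 2)]
ok = is_certificate(A, b, z)
```

Verifying $z$ is cheap even though finding it required the projection argument, which is what makes it a useful certificate.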

Applications:

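- If $A$ has linearly independent rows, then $Ax = b$ has a solution for every $b \in \mathbb{R}^m$. Since the rows of $A$ (the columns of $A^\top$) are linearly independent, the only solution to $A^\top z = 0$ is $z = 0$, and $z = 0$ cannot satisfy $b^\top z = 1$. Hence $D = \emptyset$, and thus $P \neq \emptyset$: there is always a solution $x$.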

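- We can also show that a vector $b$ is linearly independent of a set of vectors $v_1, \dots, v_n$, i.e. $b \notin \operatorname{span}(v_1, \dots, v_n)$. We put the $v_j$ as columns into a matrix $A$ and consider the equation $Ax = b$. If there is no solution, $b$ is independent of $v_1, \dots, v_n$. To show that the system has no solution, we use the certificate from 6.2.4: it suffices to exhibit some $z$ with $A^\top z = 0$ and $b^\top z = 1$.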