The determinant of a matrix can be understood as the volume of the unit cube after the transformation. The cube can be stretched, squished, or collapsed to zero volume (non-invertible matrix), and its image is a parallelepiped.

(Informally) The signed volume of this parallelepiped gives us the determinant.

7.1 Definition from Properties

Properties of the determinant

- $\det(A) = 0$ if $A$ has linearly dependent columns.

- Exchanging two rows flips the sign of the determinant.

- Subtracting a multiple of one row from another row does not change the determinant. (We can use G-J to simplify calculations…)

Multilinearity

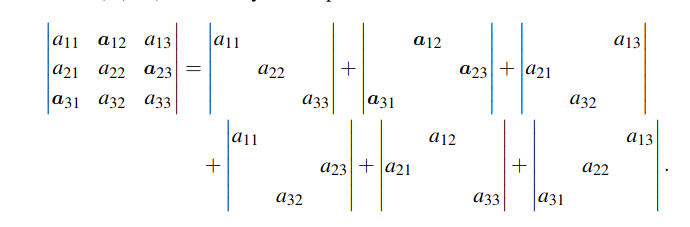

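The determinant is “multilinear”: it is linear in each row separately, as long as all the other rows are held fixed. Writing the rows as arguments, this means

$$\det(\dots, \alpha u + \beta v, \dots) = \alpha \det(\dots, u, \dots) + \beta \det(\dots, v, \dots),$$

where the rows hidden in the dots are the same in all three determinants. The same holds for columns.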

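Example: For a $2 \times 2$ matrix, fixing the second column:

$$\det\begin{pmatrix} a + a' & b \\ c + c' & d \end{pmatrix} = \det\begin{pmatrix} a & b \\ c & d \end{pmatrix} + \det\begin{pmatrix} a' & b \\ c' & d \end{pmatrix}$$

Which gives us $(a + a')d - b(c + c') = (ad - bc) + (a'd - bc')$.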

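Important: Multilinearity does not mean $\det(\alpha A) = \alpha \det(A)$. Instead: $\det(\alpha A) = \alpha^n \det(A)$ for an $n \times n$ matrix $A$, because scaling the matrix affects all $n$ columns simultaneously.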
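The two statements above can be checked numerically on a small case. The following is a minimal sketch for $2 \times 2$ matrices; the helper `det2` and the concrete numbers are illustrative assumptions, not part of the notes.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as [[a, b], [c, d]]."""
    (a, b), (c, d) = m
    return a * d - b * c

# Linearity in the first column, with the second column (b, d) held fixed.
u, v = (1, 4), (3, -2)   # two candidate first columns (arbitrary values)
b, d = 5, 7              # fixed second column
alpha, beta = 2, -3

combined = [[alpha * u[0] + beta * v[0], b],
            [alpha * u[1] + beta * v[1], d]]
lhs = det2(combined)
rhs = (alpha * det2([[u[0], b], [u[1], d]])
       + beta * det2([[v[0], b], [v[1], d]]))

# Scaling the whole matrix scales both columns, so the factor is alpha**2.
A = [[1, 2], [3, 4]]
scaled = [[alpha * x for x in row] for row in A]

print(lhs == rhs)                              # linear in one column
print(det2(scaled) == alpha ** 2 * det2(A))    # factor alpha**n with n = 2
```

Both comparisons should print `True`, while `det2(scaled) == alpha * det2(A)` would be false for this matrix.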

Determinant of Upper-Triangular

The determinant of an upper (or lower) triangular matrix $T$ is the product of its diagonal entries: $\det(T) = \prod_{k=1}^{n} T_{kk}$.

Using Gauss-Jordan to simplify calculations

Because the determinant of an Upper- (or Lower)-Triangular matrix is the product of the pivots, we can often use G-J-Elimination to make computation easier.

Two caveats apply. Each row exchange flips the sign of the determinant, so we have to keep track of the number of exchanges. Multiplying a row by a factor $c$ multiplies the determinant by $c$, so we have to divide by that same factor in the end.
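The elimination approach can be sketched in code. This is one possible implementation, assuming the matrix is given as a list of rows; the function name `det_by_elimination` is made up for illustration, and `Fraction` is used only to keep the arithmetic exact. It uses row exchanges (tracked in `sign`) and row subtractions, and never rescales a row.

```python
from fractions import Fraction

def det_by_elimination(matrix):
    """Determinant via row reduction to upper-triangular form."""
    a = [[Fraction(x) for x in row] for row in matrix]  # exact arithmetic
    n = len(a)
    sign = 1
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot_row = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot_row is None:
            return Fraction(0)  # no pivot: the columns are linearly dependent
        if pivot_row != col:
            a[col], a[pivot_row] = a[pivot_row], a[col]
            sign = -sign  # each row exchange flips the sign
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            # Subtracting a multiple of the pivot row does not change the determinant.
            a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
    product = Fraction(1)
    for i in range(n):
        product *= a[i][i]  # product of the pivots
    return sign * product

print(det_by_elimination([[2, 1, 1], [4, 3, 3], [8, 7, 9]]))
print(det_by_elimination([[0, 2], [3, 4]]))
```

The second call needs one row exchange, which is why its result carries a flipped sign relative to the product of the pivots.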

7.2 General Case (def using Permutations)

7.2.1 Sign of a Permutation

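Given a permutation $\sigma$ of $n$ elements, its sign $\operatorname{sgn}(\sigma)$ is either $-1$ or $1$. The sign records the parity of the number of inversions, i.e. pairs $i < j$ that are out of order after applying the permutation ($\sigma(i) > \sigma(j)$): it is $1$ if this number is even and $-1$ if it is odd.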

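Example: The permutation $\sigma$ of $\{1, 2, 3, 4\}$, defined as $\sigma(1) = 2$, $\sigma(2) = 1$, $\sigma(3) = 3$, $\sigma(4) = 4$, has the pairs $(i, j)$ for $i < j$. For all these pairs $\sigma(i) < \sigma(j)$, except for $(1, 2)$ which gives $\sigma(1) = 2 > 1 = \sigma(2)$. Thus $\operatorname{sgn}(\sigma) = -1$.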

This can also be counted as the parity of the number of row swaps necessary to get back to the identity.

Properties of the sign

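- The sign of a permutation is multiplicative: $\operatorname{sgn}(\sigma \circ \tau) = \operatorname{sgn}(\sigma) \cdot \operatorname{sgn}(\tau)$.

- For all $n \geq 2$, exactly half of the permutations of $n$ elements have sign $+1$ and the rest have sign $-1$.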
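Both properties can be verified by brute force for a small number of elements. The sketch below assumes a permutation is stored as a tuple of 0-based images (so `(1, 0, 2, 3)` swaps the first two elements); the helper names `sign` and `compose` are illustrative.

```python
from itertools import permutations

def sign(perm):
    """Sign of a permutation given as a tuple of 0-based images."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n)
                     if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def compose(sigma, tau):
    """Return the permutation 'sigma after tau', i.e. i -> sigma[tau[i]]."""
    return tuple(sigma[t] for t in tau)

perms = list(permutations(range(4)))  # all 4! = 24 permutations

# Multiplicativity, checked over every pair of permutations.
multiplicative = all(sign(compose(s, t)) == sign(s) * sign(t)
                     for s in perms for t in perms)

# Count how many permutations have sign +1.
even_count = sum(1 for p in perms if sign(p) == 1)

print(multiplicative, even_count, len(perms))
```

Counting inversions takes quadratic time per permutation, which is fine for illustration but not the fastest way to compute a sign.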

7.2.3 Determinant formula

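Given a square matrix $A \in \mathbb{R}^{n \times n}$, the determinant is defined as:

$$\det(A) = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma) \prod_{i=1}^{n} A_{i, \sigma(i)}$$

where $S_n$ is the set of all permutations of $n$ elements (of which there are $n!$).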

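Examples: In a $2 \times 2$ matrix, there are $2$ permutations, the identity and the swap of the two elements. From this we can get the formula for $2 \times 2$ matrices: $\det\begin{pmatrix} a & b \\ c & d \end{pmatrix} = ad - bc$.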

Note that this is the multilinear decomposition: these are the terms we get in the sum for $\det(A)$ according to the above formula. Each of them corresponds to one of the permutations applied to the matrix.

We then want to find the sign of each permutation: it is given by the parity of the number of row exchanges needed to get the selected entries back onto the diagonal.
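The permutation formula translates almost directly into code. The following sketch assumes 0-based indices and a matrix stored as a list of rows; `det_leibniz` and `sign` are hypothetical helper names. Since the sum has $n!$ terms, this is only practical for small matrices.

```python
from itertools import permutations
from math import prod

def sign(perm):
    """Sign of a permutation (tuple of 0-based images) via inversion parity."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n)
                     if perm[i] > perm[j])
    return -1 if inversions % 2 else 1

def det_leibniz(a):
    """Sum over all permutations p of sign(p) * a[0][p[0]] * ... * a[n-1][p[n-1]]."""
    n = len(a)
    return sum(sign(p) * prod(a[i][p[i]] for i in range(n))
               for p in permutations(range(n)))

print(det_leibniz([[1, 2], [3, 4]]))                    # 1*4 - 2*3
print(det_leibniz([[2, 5, 1], [0, 3, 7], [0, 0, 4]]))   # triangular: 2*3*4
```

For the triangular example, every permutation other than the identity picks at least one zero below the diagonal, so only the product of the diagonal entries survives.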

7.2.4 Properties of the Determinant

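- Given a permutation matrix $P$ corresponding to a permutation $\sigma$, then $\det(P) = \operatorname{sgn}(\sigma)$ (this is $\pm 1$, as $P$ is also an orthogonal matrix, see the third property below). We sometimes write $\operatorname{sgn}(P)$.

- Given a triangular (either upper or lower) matrix $T \in \mathbb{R}^{n \times n}$, we have $\det(T) = \prod_{k=1}^{n} T_{kk}$; in particular, $\det(I) = 1$.

- If $Q$ is an orthogonal matrix then $\det(Q) = 1$ or $\det(Q) = -1$. This makes sense as there is no scaling in an orthogonal matrix.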

Note that for a triangular matrix, if one of the diagonal entries is zero, the determinant is also 0 (as it’s in the product). This matches what we observed before.

7.2.5 Determinant of the Transpose

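Given a matrix $A \in \mathbb{R}^{n \times n}$, then $\det(A^\top) = \det(A)$.

Proof Idea: This follows from the fact that the inverse of a permutation has the same sign, $\operatorname{sgn}(\sigma^{-1}) = \operatorname{sgn}(\sigma)$, and that transposing the matrix amounts to replacing each permutation in the determinant formula by its inverse.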

7.2.6 Determinant Properties 2

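- A matrix $A \in \mathbb{R}^{n \times n}$ is invertible if and only if $\det(A) \neq 0$.

- Given matrices $A, B \in \mathbb{R}^{n \times n}$, we have $\det(AB) = \det(A) \cdot \det(B)$.

- Given a matrix $A \in \mathbb{R}^{n \times n}$ such that $\det(A) \neq 0$, then $A$ is invertible and $\det(A^{-1}) = \frac{1}{\det(A)}$.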
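As a sanity check, the transpose, product and inverse rules can be tested on a concrete example. This is a minimal sketch with hand-picked $2 \times 2$ matrices; the helpers `det`, `matmul` and `transpose` and the matrices `A`, `B`, `A_inv` are assumptions made for illustration, with `A_inv` computed by hand and confirmed inside the code.

```python
from fractions import Fraction
from itertools import permutations
from math import prod

def det(a):
    """Determinant via the sum over permutations (small matrices only)."""
    n = len(a)
    total = 0
    for p in permutations(range(n)):
        inversions = sum(1 for i in range(n) for j in range(i + 1, n)
                         if p[i] > p[j])
        total += (-1) ** inversions * prod(a[i][p[i]] for i in range(n))
    return total

def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def transpose(a):
    return [list(col) for col in zip(*a)]

A = [[Fraction(2), Fraction(1)], [Fraction(4), Fraction(3)]]
B = [[Fraction(0), Fraction(5)], [Fraction(-1), Fraction(2)]]
A_inv = [[Fraction(3, 2), Fraction(-1, 2)], [Fraction(-2), Fraction(1)]]

identity = [[Fraction(1), Fraction(0)], [Fraction(0), Fraction(1)]]
print(matmul(A, A_inv) == identity)          # A_inv really is the inverse of A
print(det(transpose(A)) == det(A))           # transpose rule
print(det(matmul(A, B)) == det(A) * det(B))  # product rule
print(det(A_inv) == 1 / det(A))              # inverse rule
```

A single example does not prove the rules, but all four comparisons should print `True`.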