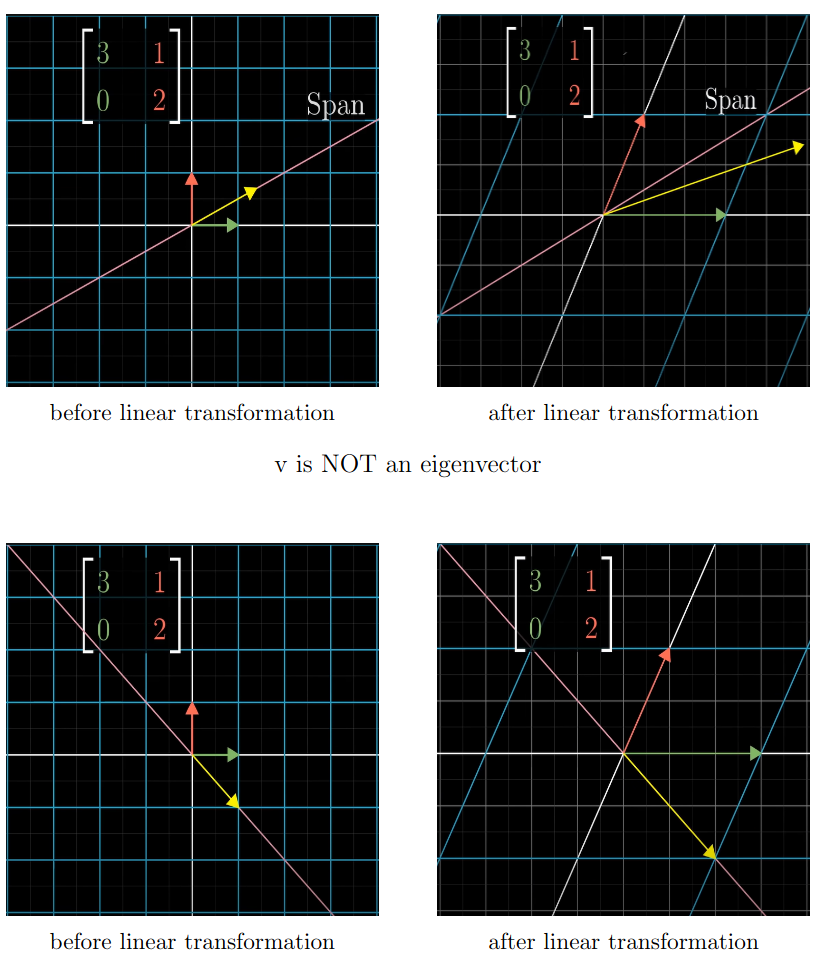

An eigenvalue, eigenvector pair is a scalar $\lambda$ and a vector $v \neq 0$ such that $Av = \lambda v$. This means that the only transformation $A$ applies to this vector is a scaling by $\lambda$ (or a flip, if $\lambda < 0$): no turning, shifting, etc.

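For each eigenvalue there are infinitely many eigenvectors: every nonzero multiple $cv$ of an eigenvector $v$ (all collinear vectors) is again an eigenvector for the same eigenvalue.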

If a matrix $A$ has the eigenvalue $0$, it collapses a set of nonzero vectors to $0$, meaning that $N(A) \neq \{0\}$ and thus $A$ is not invertible. On the other hand, if $0$ is not an EW of $A$, then $A$ is invertible.

8.1 Complex Numbers

Because eigenvalues can be complex, we need some tools for manipulating complex numbers.

We define $i$ such that $i^2 = -1$ in the complex numbers $\mathbb{C}$. This can be thought of as an extension field of $\mathbb{R}$ (see DiskMath).

The operations in this field basically correspond to the polynomial operations we have seen, with $i$ being the “variable” (reduced using $i^2 = -1$):

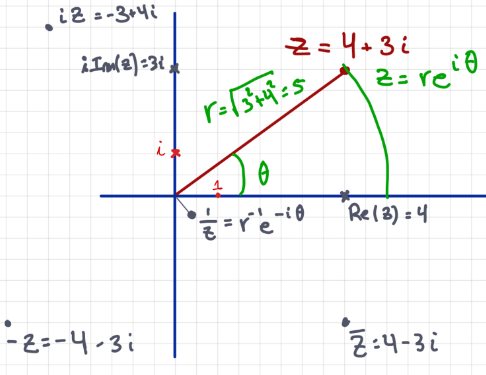

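Given $z = a + bi \in \mathbb{C}$ with $a, b \in \mathbb{R}$ we have the following notation:

- $\operatorname{Re}(z) = a$ (the real part)

- $\operatorname{Im}(z) = b$ (the imaginary part)

- $|z| = \sqrt{a^2 + b^2}$ (the modulus, basically the length)

- $\bar{z} = a - bi$ (the complex conjugate of $z$)

There are some useful shortcuts:

- $\overline{z_1 + z_2} = \overline{z_1} + \overline{z_2}$ and $\overline{z_1 z_2} = \overline{z_1}\,\overline{z_2}$ (conjugation commutes with addition and multiplication)

- $z \bar{z} = |z|^2$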

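We can also use polar coordinates here, based on $e^{i\theta} = \cos\theta + i\sin\theta$ (Euler’s Formula).

We can then express any $z \in \mathbb{C}$ as $z = r e^{i\theta}$, where $r = |z|$ is the modulus and $\theta$ (restricted to an interval of length $2\pi$, e.g. $[0, 2\pi)$) is an angle, the argument of $z$.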
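The notation above maps directly onto Python's built-in `complex` type. A minimal standard-library sketch; the sample value `z = 1 + 1j` is an arbitrary choice. Note that `cmath.polar` returns the argument in $[-\pi, \pi]$, which is a different interval than $[0, 2\pi)$.

```python
import cmath
import math

z = 1 + 1j  # arbitrary example value z = a + bi with a = b = 1

# Re(z), Im(z), |z| and the conjugate, as in the notation list
real_part, imag_part, modulus, conj = z.real, z.imag, abs(z), z.conjugate()

# Polar form z = r * e^{i theta}; cmath.polar returns theta in [-pi, pi]
r, theta = cmath.polar(z)
rebuilt = r * cmath.exp(1j * theta)

# Euler's formula: e^{i theta} = cos(theta) + i sin(theta)
euler_lhs = cmath.exp(1j * theta)
euler_rhs = complex(math.cos(theta), math.sin(theta))

print(r, theta)  # sqrt(2) ≈ 1.41421 and pi/4 ≈ 0.78540
print(abs(rebuilt - z) < 1e-12, abs(euler_lhs - euler_rhs) < 1e-12)
```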

8.1.2 Fundamental Theorem of Algebra

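Any non-constant polynomial $P(z) = a_0 + a_1 z + \dots + a_n z^n$ of degree $n \geq 1$ with $a_i \in \mathbb{C}$ has a zero (existence): there is a $\lambda \in \mathbb{C}$ such that $P(\lambda) = 0$.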

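8.1.3 Zeros

Any non-constant polynomial $P$ of degree $n \geq 1$ with leading coefficient $a_n$ has $n$ zeros $\lambda_1, \dots, \lambda_n \in \mathbb{C}$, perhaps with repetitions, such that $P(z) = a_n (z - \lambda_1)(z - \lambda_2) \cdots (z - \lambda_n)$. The number of times $\lambda_i$ appears in this expansion is called the algebraic multiplicity of the zero $\lambda_i$.

Algebraic Multiplicity

The algebraic multiplicity of a root is the number of times it appears in the factorisation.

Example: If the algebraic multiplicity of $\lambda$ is $k$, then $P(z) = (z - \lambda)^k Q(z)$ with $Q(\lambda) \neq 0$. For instance, in $P(z) = (z - 1)^2 (z + 3)$ the zero $1$ has algebraic multiplicity $2$.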

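We define one more operation: $A^* = \overline{A}^\top$ (the Hermitian transpose), which conjugates each entry element-wise and then transposes. Note that for a real matrix $A$ we simply get $A^* = A^\top$.

For $v \in \mathbb{C}^n$ we have $v^* v = \sum_{i=1}^n \overline{v_i} v_i = \sum_{i=1}^n |v_i|^2 = \|v\|^2$. Note that for $v_i = a + bi$ we have $\overline{v_i} v_i = (a - bi)(a + bi) = a^2 + b^2$, thus at the end we get a real, non-negative number. This is because $\bar{z} z = |z|^2$, where the absolute value is the modulus of a complex number.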
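A small standard-library sketch of the Hermitian transpose and of $v^* v$, with matrices stored as nested lists of Python numbers. The helper names `hermitian_transpose` and `vstar_v` are hypothetical, chosen for this example.

```python
def hermitian_transpose(A):
    """Return A^*: conjugate every entry, then transpose (A given as a list of rows)."""
    rows, cols = len(A), len(A[0])
    return [[A[i][j].conjugate() for i in range(rows)] for j in range(cols)]


def vstar_v(v):
    """Return v^* v = sum of conj(v_i) * v_i = sum of |v_i|^2."""
    return sum(x.conjugate() * x for x in v)


A = [[1 + 1j, 2], [3j, 4]]
print(hermitian_transpose(A))  # conjugated and transposed

v = [1 + 2j, 3 - 1j]
s = vstar_v(v)
print(s)  # (15+0j): real and non-negative, |1+2i|^2 + |3-i|^2 = 5 + 10
```

For real entries, `.conjugate()` returns the number unchanged, so the same function gives the ordinary transpose.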

Tricks with Complex Numbers

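To prove some $z \in \mathbb{C}$ is actually in $\mathbb{R}$:

- Show that $z = \bar{z}$.

Tricks 2

If you have some equation $a + bi = c + di$ with $a, b, c, d \in \mathbb{R}$, we can separate it into:

$$a = c \quad \text{and} \quad b = d$$

because of the $i$: real and imaginary parts must match separately.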

8.1.999 (Example for Fibonacci from the Script)

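We can express the Fibonacci recurrence $F_{n+2} = F_{n+1} + F_n$ (with $F_0 = 0$, $F_1 = 1$) as a matrix equation $x_{n+1} = A x_n$, where $x_n = \begin{pmatrix} F_{n+1} \\ F_n \end{pmatrix}$ and $A = \begin{pmatrix} 1 & 1 \\ 1 & 0 \end{pmatrix}$.

Eigendecomposition: From $\det(A - \lambda I) = \lambda^2 - \lambda - 1 = 0$, the eigenvalues $\lambda_1 = \frac{1 + \sqrt{5}}{2}$ (the golden ratio $\varphi$) and $\lambda_2 = \frac{1 - \sqrt{5}}{2}$ are found, along with their eigenvectors $v_1 = \begin{pmatrix} \lambda_1 \\ 1 \end{pmatrix}$ and $v_2 = \begin{pmatrix} \lambda_2 \\ 1 \end{pmatrix}$. These eigenvectors are independent since $\lambda_1 \neq \lambda_2$, and thus they form a basis for $\mathbb{R}^2$.

Basis expansion: The initial state $x_0 = \begin{pmatrix} 1 \\ 0 \end{pmatrix}$ is written as a linear combination of eigenvectors with coefficients $\alpha_1 = \frac{1}{\sqrt{5}}$ and $\alpha_2 = -\frac{1}{\sqrt{5}}$: $x_0 = \alpha_1 v_1 + \alpha_2 v_2$.

Power computation: Since $A^n v_i = \lambda_i^n v_i$, we get $x_n = A^n x_0 = \alpha_1 \lambda_1^n v_1 + \alpha_2 \lambda_2^n v_2$, and reading off the second component gives the closed form:

$$F_n = \frac{1}{\sqrt{5}} \left( \left( \frac{1 + \sqrt{5}}{2} \right)^n - \left( \frac{1 - \sqrt{5}}{2} \right)^n \right)$$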
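The Fibonacci derivation can be checked numerically. A minimal sketch in Python with floating-point arithmetic, so it is only trustworthy for moderate $n$ before rounding errors matter; `fib_iter` and `fib_closed` are hypothetical helper names.

```python
import math

SQRT5 = math.sqrt(5)
LAMBDA1 = (1 + SQRT5) / 2  # golden ratio, eigenvalue of A = [[1, 1], [1, 0]]
LAMBDA2 = (1 - SQRT5) / 2  # second eigenvalue


def fib_iter(n):
    """F_n by repeatedly applying x_{k+1} = A x_k, starting from x_0 = (F_1, F_0) = (1, 0)."""
    f_next, f = 1, 0
    for _ in range(n):
        f_next, f = f_next + f, f_next
    return f


def fib_closed(n):
    """F_n from the closed form (lambda_1^n - lambda_2^n) / sqrt(5), rounded to an integer."""
    return round((LAMBDA1**n - LAMBDA2**n) / SQRT5)


# v_1 = (lambda_1, 1) is an eigenvector: A v_1 = (lambda_1 + 1, lambda_1) = lambda_1 v_1
A_v1 = (LAMBDA1 + 1, LAMBDA1)
print(abs(A_v1[0] - LAMBDA1 * LAMBDA1) < 1e-12)

print([fib_closed(n) for n in range(10)])  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```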

Worked Example: Tribonacci Numbers

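Recurrence: $T_{n+3} = T_{n+2} + T_{n+1} + T_n$, with given initial values $T_0$, $T_1$, $T_2$ (a common choice is $T_0 = 0$, $T_1 = 0$, $T_2 = 1$).

Step 1: Companion matrix: with $x_n = \begin{pmatrix} T_{n+2} \\ T_{n+1} \\ T_n \end{pmatrix}$ we have $x_{n+1} = A x_n$ for

$$A = \begin{pmatrix} 1 & 1 & 1 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \end{pmatrix}$$

Step 2: Characteristic polynomial:

$$\det(\lambda I - A) = \lambda^3 - \lambda^2 - \lambda - 1$$

This yields one real root (the “tribonacci constant” $\tau \approx 1.839$) and two complex conjugate roots $\mu$ and $\bar{\mu}$.

Follow the same procedure as before to get the closed form:

$$T_n = c_1 \tau^n + c_2 \mu^n + c_3 \bar{\mu}^n,$$

where the coefficients $c_1, c_2, c_3$ come from expanding $x_0$ in the eigenbasis, i.e. from the initial values.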
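A hedged numerical sketch of the tribonacci closed form, assuming the initial values $T_0 = 0$, $T_1 = 0$, $T_2 = 1$. It finds $\tau$ by bisection, gets $\mu, \bar\mu$ by dividing out $(\lambda - \tau)$, and, for these particular initial values only, uses the coefficients $c_k = 1 / \prod_{j \neq k} (r_k - r_j)$ (a standard Vandermonde identity) instead of solving the $3 \times 3$ system explicitly. All helper names are hypothetical.

```python
import cmath


def char_poly(x):
    """Characteristic polynomial det(x I - A) = x^3 - x^2 - x - 1 of the companion matrix."""
    return x**3 - x**2 - x - 1


def tribonacci_constant(lo=1.0, hi=2.0, steps=100):
    """Real root tau by bisection; char_poly(1) = -2 < 0 < 1 = char_poly(2)."""
    for _ in range(steps):
        mid = (lo + hi) / 2
        if char_poly(mid) < 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


tau = tribonacci_constant()
# x^3 - x^2 - x - 1 = (x - tau)(x^2 + p x + q) with p = tau - 1 and q = 1 / tau
p, q = tau - 1, 1 / tau
disc = cmath.sqrt(p * p - 4 * q)  # negative discriminant -> complex conjugate pair
mu, mu_bar = (-p + disc) / 2, (-p - disc) / 2
roots = [complex(tau), mu, mu_bar]

# Coefficients for T_0 = 0, T_1 = 0, T_2 = 1: c_k = 1 / prod_{j != k} (r_k - r_j)
coeffs = []
for k, r_k in enumerate(roots):
    denom = 1
    for j, r_j in enumerate(roots):
        if j != k:
            denom *= r_k - r_j
    coeffs.append(1 / denom)


def tribonacci_closed(n):
    """T_n = c_1 tau^n + c_2 mu^n + c_3 conj(mu)^n; the imaginary parts cancel."""
    return round(sum(c * r**n for c, r in zip(coeffs, roots)).real)


def tribonacci_iter(n):
    """T_n directly from the recurrence with T_0 = 0, T_1 = 0, T_2 = 1."""
    a, b, c = 0, 0, 1
    for _ in range(n):
        a, b, c = b, c, a + b + c
    return a


print(tau, abs(mu))  # tau ≈ 1.8393, |mu| = 1/sqrt(tau) ≈ 0.7374
print([tribonacci_closed(n) for n in range(10)])  # [0, 0, 1, 1, 2, 4, 7, 13, 24, 44]
```

The run also shows $|\mu| = 1/\sqrt{\tau} < 1$, so the complex terms die out and $T_n$ is dominated by $c_1 \tau^n$ for large $n$.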

8.2 Introduction to Eigenvalues and Eigenvectors

8.2.1 Eigenvalue, Eigenvector pair

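Given $A \in \mathbb{R}^{n \times n}$, we say $\lambda \in \mathbb{C}$ is an eigenvalue of $A$ and $v \in \mathbb{C}^n \setminus \{0\}$ is an eigenvector of $A$ associated with the eigenvalue $\lambda$ when the following holds:

$$Av = \lambda v$$

We call these an eigenvalue, eigenvector pair. If $\lambda \in \mathbb{R}$ and $v \in \mathbb{R}^n$, then we call $\lambda$ a real eigenvalue, and the associated pair a real eigenvalue, eigenvector pair.

Note that the zero vector is excluded: $A0 = \lambda 0$ holds for every $\lambda$, so it would trivially be an "eigenvector" for every scalar. Thus we discard it.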

How to Calculate EW, EV

Let $\lambda, v$ be an EW, EV pair, so $Av = \lambda v$. Then

\begin{align*}
Av &= \lambda v \\
Av - \lambda v &= 0 \\
(A - \lambda I)v &= 0
\end{align*}

This means that if $\lambda$ and $v$ are an eigenvalue, eigenvector pair, then $v$ is a non-zero element of the nullspace of the matrix $A - \lambda I$. Thus the matrix $A - \lambda I$ is not invertible, i.e. $\det(A - \lambda I) = 0$. We can use this to calculate the EWs:

- Writing out $\det(A - \lambda I) = 0$ gives a polynomial equation in $\lambda$. By solving it, we get the EWs.

- For each EW $\lambda$ found before, we solve $(A - \lambda I)v = 0$ for $v$. Note that $v \neq 0$ must hold by definition.
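The two steps above can be sketched for the $2 \times 2$ case, where $\det(A - \lambda I) = \lambda^2 - \operatorname{Tr}(A)\lambda + \det(A)$ can be solved with the quadratic formula. This is a minimal illustration in standard-library Python, not how numerical libraries compute eigenvalues in general; `eig2x2` is a hypothetical name, and the eigenvector is read off from one row of $A - \lambda I$.

```python
import cmath


def eig2x2(A):
    """Eigenpairs of a 2x2 matrix A = [[a, b], [c, d]].

    Step 1: roots of det(A - lambda I) = lambda^2 - (a + d) lambda + (ad - bc).
    Step 2: for each root, a nonzero v with (A - lambda I) v = 0.
    """
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)  # complex sqrt, so complex EWs work too
    pairs = []
    for lam in ((tr + disc) / 2, (tr - disc) / 2):
        if b != 0:
            v = (b, lam - a)  # row 1 vanishes; row 2 vanishes because lam is a root
        elif c != 0:
            v = (lam - d, c)  # row 2 vanishes; row 1 vanishes because lam is a root
        elif abs(lam - a) <= abs(lam - d):
            v = (1, 0)  # A is diagonal and lam is (numerically) a
        else:
            v = (0, 1)  # A is diagonal and lam is (numerically) d
        pairs.append((lam, v))
    return pairs


for lam, v in eig2x2([[2, 1], [1, 2]]):
    print(lam, v)  # EWs 3 and 1, with EVs proportional to (1, 1) and (1, -1)
```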

8.2.3 Calculate real eigenvalues

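Let $A \in \mathbb{R}^{n \times n}$. Then $\lambda \in \mathbb{R}$ is a real eigenvalue of $A$ if and only if $\det(A - \lambda I) = 0$. A vector $v \in \mathbb{R}^n \setminus \{0\}$ is an eigenvector associated with the eigenvalue $\lambda$ if and only if $v \in N(A - \lambda I)$.

Note that for a real matrix, a real EV always has a real EW associated with it (since $Av$ is real, $\lambda v$ must be real too). The eigenvalues are exactly the roots of the polynomial $\det(A - \lambda I)$. The eigenvectors for $\lambda$ are exactly the nonzero vectors in the nullspace: $v \in N(A - \lambda I)$, $v \neq 0$.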

8.2.4 Polynomial degree

$\det(A - \lambda I)$ is a polynomial in $\lambda$ of degree $n$. The coefficient of the $\lambda^n$ term is $(-1)^n$.

8.2.5 Every matrix has an EW

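Every matrix $A \in \mathbb{R}^{n \times n}$ has an eigenvalue (perhaps complex-valued). This follows by applying the Fundamental Theorem of Algebra to the degree-$n$ polynomial $\det(A - \lambda I)$.

Example: Eigenvalues of the $90^\circ$ counterclockwise rotation matrix $A = \begin{pmatrix} 0 & -1 \\ 1 & 0 \end{pmatrix}$. We need $\det(A - \lambda I) = \lambda^2 + 1 = 0$, the solutions to which are $\lambda_1 = i$ and $\lambda_2 = -i$. The eigenvectors are given by $v_1 = \begin{pmatrix} 1 \\ -i \end{pmatrix}$ and $v_2 = \begin{pmatrix} 1 \\ i \end{pmatrix}$. This makes sense: a rotation of the plane by $90^\circ$ turns every nonzero vector of $\mathbb{R}^2$, so no real vector stays on its axis and there can be no real eigenvalue. (The only axis that stays fixed is the one pointing straight up, out of the plane, but that vector is not in $\mathbb{R}^2$.)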
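The rotation example can be checked directly with Python's complex numbers; the second pair is the complex conjugate of the first. `matvec` is a hypothetical helper for a matrix-vector product on nested lists.

```python
A = [[0, -1], [1, 0]]  # counterclockwise rotation by 90 degrees


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


# (lambda, v) pairs from the example; the second is the conjugate of the first
pairs = [(1j, [1, -1j]), (-1j, [1, 1j])]
for lam, v in pairs:
    Av = matvec(A, v)
    lam_v = [lam * x for x in v]
    print(lam, all(abs(p - q) < 1e-12 for p, q in zip(Av, lam_v)))  # check is True for both
```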

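EWs of an Orthogonal Matrix

Let $Q \in \mathbb{R}^{n \times n}$ be an orthogonal matrix. If $\lambda \in \mathbb{C}$ is an eigenvalue of $Q$ then $|\lambda| = 1$. The possible values for $\lambda$ are then $1$, $-1$ and complex conjugate pairs with modulus $1$, for example $\pm i$.

This makes sense, as an orthogonal matrix preserves lengths ($\|Qv\| = \|v\|$): it only turns (or reflects) and doesn't scale.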
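A quick numerical sanity check of $|\lambda| = 1$ for $2 \times 2$ orthogonal matrices: rotations by a few arbitrary angles and one reflection. This is only a sketch using the quadratic formula for the characteristic polynomial; `rotation` and `eigenvalues2x2` are hypothetical helpers.

```python
import cmath
import math


def rotation(theta):
    """Orthogonal 2x2 matrix rotating the plane counterclockwise by theta."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]


def eigenvalues2x2(A):
    """Both roots of det(A - lambda I) = lambda^2 - tr(A) lambda + det(A)."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


for theta in (0.3, math.pi / 2, 2.0):
    l1, l2 = eigenvalues2x2(rotation(theta))
    print(theta, abs(l1), abs(l2))  # both moduli equal 1 up to rounding; l1, l2 = e^{±i theta}

reflection = [[1, 0], [0, -1]]  # also orthogonal, with real EWs
print(eigenvalues2x2(reflection))  # ((1+0j), (-1+0j))
```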

Complex EWs

Note that complex eigenvalues are possible even for real-valued matrices, as seen above.

8.2.8 Conjugate Pairs

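Let $A \in \mathbb{R}^{n \times n}$. If $\lambda, v$ is an eigenvalue, eigenvector pair of $A$, then $\bar{\lambda}, \bar{v}$ is also an eigenvalue, eigenvector pair of $A$, since $A\bar{v} = \overline{Av} = \overline{\lambda v} = \bar{\lambda}\bar{v}$ (because $A$ is real).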

8.3 Properties

8.3.1 Properties of EWs, EVs (a)

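If $\lambda$ and $v$ are an EW-EV pair of a matrix $A$, then, for $k \geq 1$, $\lambda^k$ and $v$ are an EW-EV pair of the matrix $A^k$.

Intuitively, applying $A$ to $v$ scales it by $\lambda$. Applying $A$ again scales the already scaled vector by $\lambda$ once more, giving $\lambda^2 v$, etc.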
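A small integer example of this property, using the matrix $A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$ (chosen for illustration), whose EVs $(1, 1)$ and $(1, -1)$ belong to the EWs $3$ and $1$. `matvec` and `apply_power` are hypothetical helpers.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


def apply_power(M, v, k):
    """Compute M^k v by applying M to v k times (no explicit matrix power needed)."""
    for _ in range(k):
        v = matvec(M, v)
    return v


A = [[2, 1], [1, 2]]
print(apply_power(A, [1, 1], 5))   # [243, 243], i.e. 3^5 * (1, 1)
print(apply_power(A, [1, -1], 5))  # [1, -1], i.e. 1^5 * (1, -1)
```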

8.3.1 Properties (b)

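Let $A$ be an invertible matrix. If $\lambda$ and $v$ are an EW-EV pair of $A$, then $\frac{1}{\lambda}$ and $v$ are an EW-EV pair of the matrix $A^{-1}$.

Proof: We have $Av = \lambda v$, and $\lambda \neq 0$ since $A$ is invertible. Multiplying by $A^{-1}$ gives $v = \lambda A^{-1} v$, thus $A^{-1} v = \frac{1}{\lambda} v$ and we get the EW-EV pair $\frac{1}{\lambda}, v$ of $A^{-1}$.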
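Using exact fractions (Python's `fractions.Fraction`) avoids rounding noise when checking this property on a small example; the matrix and the helper names are illustrative assumptions.

```python
from fractions import Fraction


def inverse2x2(M):
    """Exact inverse of a 2x2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("matrix is not invertible (it has the eigenvalue 0)")
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


A = [[2, 1], [1, 2]]  # EW 3 with EV (1, 1), EW 1 with EV (1, -1)
A_inv = inverse2x2(A)
print(matvec(A_inv, [1, 1]))   # [Fraction(1, 3), Fraction(1, 3)]: EW 1/3
print(matvec(A_inv, [1, -1]))  # [Fraction(1, 1), Fraction(-1, 1)]: EW 1/1 = 1
```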

8.3.2 Linear independence

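Let $\lambda_1, \dots, \lambda_k$ be eigenvalues of $A \in \mathbb{R}^{n \times n}$ and $v_1, \dots, v_k$ associated EVs. If the $\lambda_i$ are all distinct, then the EVs $v_1, \dots, v_k$ are linearly independent.

From this we know that if these eigenvectors span the space (e.g. if there are $n$ of them), we can write any vector as a linear combination of the eigenvectors.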

8.3.3 Basis from Eigenvectors

Let $A \in \mathbb{R}^{n \times n}$ have $n$ distinct real eigenvalues (meaning that the zeros of $\det(A - \lambda I)$ as described in Corollary 8.1.3 are all distinct, each with algebraic multiplicity $1$). Then there is a basis of $\mathbb{R}^n$ made up of EVs of $A$.

We call this an eigenbasis, or a complete set of real EVs. It will come in handy later for diagonalisation.

8.3.4 Characteristic Polynomial

The polynomial $p(z) = \det(zI - A)$ is called the characteristic polynomial of the matrix $A$. The eigenvalues, as they show up as roots of the polynomial, are not all distinct in general. The number of times an eigenvalue shows up is called the algebraic multiplicity of the eigenvalue.

Note that $\det(A - zI)$ and $\det(zI - A)$ have the same roots and can both be used to find the eigenvalues. We can convert between them because $\det(A - zI) = (-1)^n \det(zI - A)$.

The characteristic polynomial is always monic, whereas $\det(A - zI)$ has a leading coefficient of $-1$ if the degree $n$ is odd. Therefore working with the characteristic polynomial is often easier.

Note: as defined later, the geometric multiplicity of an eigenvalue $\lambda$ is $\dim N(A - \lambda I)$.

8.3.4 (2)

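The trace of $A \in \mathbb{R}^{n \times n}$ is defined as $\operatorname{Tr}(A) = \sum_{i=1}^n A_{ii}$, the sum of its diagonal entries.

8.3.5 Eigenvalues of the transpose

The eigenvalues of $A^\top$ are the same as those of $A$, since $\det(A^\top - \lambda I) = \det((A - \lambda I)^\top) = \det(A - \lambda I)$.

WARNING: The eigenvectors are not the same in general, however.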

8.3.6 Trace and Determinant

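Let $A \in \mathbb{R}^{n \times n}$ and $\lambda_1, \dots, \lambda_n$ its eigenvalues. Then $\operatorname{Tr}(A) = \sum_{i=1}^n \lambda_i$ and $\det(A) = \prod_{i=1}^n \lambda_i$.

Be careful to include each eigenvalue as often as its algebraic multiplicity in these sums/products. You can use this to double check calculations.

Explanation (1): The eigenvalues describe how much each eigenvector is scaled. Thus, by multiplying the scaling factors of all directions, we can figure out how the volume of the unit cube changes, which is the determinant (this intuition works best when there is a basis of real EVs).

Explanation (2): Lemma 8.3.6 is quite surprising, since the determinant and trace of a real matrix are real, whereas the eigenvalues in general need not be. It holds because complex eigenvalues always show up in conjugate pairs $\lambda$ and $\bar{\lambda}$, and because $\lambda + \bar{\lambda} = 2\operatorname{Re}(\lambda) \in \mathbb{R}$ and $\lambda\bar{\lambda} = |\lambda|^2 \in \mathbb{R}$.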
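One way to apply the "double check" advice: verify candidate eigenpairs, then compare their sum and product with the trace and determinant. The $3 \times 3$ matrix below (a $90^\circ$ rotation block plus a scaling by $2$) is an illustrative choice; its complex EWs $\pm i$ form a conjugate pair, so the sum and product come out real as Explanation (2) predicts. Helper names are hypothetical.

```python
def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]


def det3(M):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


A = [[0, -1, 0], [1, 0, 0], [0, 0, 2]]
eigenpairs = [(1j, [1, -1j, 0]), (-1j, [1, 1j, 0]), (2, [0, 0, 1])]

# First make sure every (lambda, v) really satisfies A v = lambda v
valid = all(
    all(abs(x - lam * y) < 1e-12 for x, y in zip(matvec(A, v), v))
    for lam, v in eigenpairs
)

ew_sum = sum(lam for lam, _ in eigenpairs)
ew_prod = 1
for lam, _ in eigenpairs:
    ew_prod *= lam

trace = sum(A[k][k] for k in range(3))
print(valid, ew_sum, trace)  # sum of EWs vs. trace
print(ew_prod, det3(A))      # product of EWs vs. determinant
```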

8.3.7 Properties of the trace

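$\operatorname{Tr}(A + B) = \operatorname{Tr}(A) + \operatorname{Tr}(B)$ and $\operatorname{Tr}(AB) = \operatorname{Tr}(BA)$. The trace is linear, which makes sense as matrix addition is element-wise, and it does not care about the order of the factors in a product, since $\operatorname{Tr}(AB) = \sum_{i,j} A_{ij} B_{ji} = \operatorname{Tr}(BA)$.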

Warnings

- $A$ and $A^\top$ share eigenvalues, not eigenvectors.

- The eigenvalues of $A + B$ are in general not the eigenvalues of $A$ plus those of $B$; there is no simple relation between them.

- The eigenvalues of $AB$ or $BA$ are not easily computed from those of $A$ and $B$.

- Gaussian elimination does not preserve EWs or EVs. Row operations keep subspaces like the nullspace and the row space of $A$ unchanged, but they change the matrix itself, and EWs and EVs depend on the actual matrix, not just on these subspaces.

Some extra Notes

Special cases

Real symmetric matrices ($A = A^\top$) always have real eigenvalues. Real skew-symmetric matrices ($A^\top = -A$) always have purely imaginary (or zero) eigenvalues!
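For $2 \times 2$ matrices this can be seen from the quadratic formula: for $\begin{pmatrix} a & b \\ b & d \end{pmatrix}$ the discriminant is $(a - d)^2 + 4b^2 \geq 0$, while for $\begin{pmatrix} 0 & b \\ -b & 0 \end{pmatrix}$ it is $-4b^2 \leq 0$, giving the EWs $\pm ib$. A small check on a few arbitrarily chosen example matrices, in standard-library Python (`eigenvalues2x2` is a hypothetical helper):

```python
import cmath


def eigenvalues2x2(A):
    """Both roots of det(A - lambda I) = lambda^2 - tr(A) lambda + det(A)."""
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


symmetric = [[[2, 1], [1, 2]], [[1, 3], [3, -4]], [[0, 5], [5, 0]]]
skew_symmetric = [[[0, 2], [-2, 0]], [[0, -0.5], [0.5, 0]], [[0, 0], [0, 0]]]

for S in symmetric:
    print("symmetric", eigenvalues2x2(S))       # imaginary parts are 0
for K in skew_symmetric:
    print("skew-symmetric", eigenvalues2x2(K))  # real parts are 0
```

This only illustrates the $2 \times 2$ case; the general statement needs a proof (e.g. via $v^* A v$), not a few examples.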

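If $AB = BA$ ($A$, $B$ commute), then they share an EV

Assume $AB = BA$.

If $\lambda, v$ is an EW-EV pair of $A$, then $A(Bv) = B(Av) = \lambda (Bv)$, thus $Bv$ (if nonzero) is an eigenvector of $A$ for the same EW $\lambda$. (If $Bv = 0$, then $v$ is already an EV of $B$ with EW $0$.) So $B$ maps the eigenspace $N(A - \lambda I)$ into itself. If this eigenspace is one-dimensional (easiest to see for a complete set of real EVs with distinct EWs), $Bv$ is a multiple of $v$, thus $v$ is also an EV of $B$. In general, $B$ restricted to this eigenspace still has an eigenvector, which is then an EV of both.

Thus $A$ and $B$ share an EV $v$: if $Av = \lambda v$ and $Bv = \mu v$, then $(A + B)v = (\lambda + \mu)v$ and $ABv = \lambda\mu v$, so $A + B$ and $AB$ also have that EV.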

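Eigenvalues of $A + cI$

For a real eigenvalue $\lambda$ of $A$ and $c \in \mathbb{R}$, $\lambda + c$ is a real eigenvalue of the matrix $A + cI$ (with the same eigenvectors). This is easily verified by calculation: $(A + cI)v = \lambda v + cv = (\lambda + c)v$.

Intuitively this makes sense: by adding $cI$ we're increasing the values on the diagonal by $c$, meaning we have to increase the value of $\lambda$ by the same amount so that $(A + cI) - (\lambda + c)I = A - \lambda I$ is singular again.

Example: If $A$ has eigenvalues $\lambda_1, \dots, \lambda_n$, what are the eigenvalues of $A^k + cI$? By Lemma 8.3.1 (a), $A^k$ has the eigenvalues $\lambda_1^k, \dots, \lambda_n^k$. Adding $cI$ then shifts each of them by $c$, thus $\lambda_1^k + c, \dots, \lambda_n^k + c$ are the EWs.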
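A small numerical illustration of this kind of example with $k = 2$ and $c = 2$, for the illustrative matrix $A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$ with EWs $3$ and $1$: the EWs of $A^2 + 2I$ should be $3^2 + 2 = 11$ and $1^2 + 2 = 3$. Helper names are hypothetical.

```python
import cmath


def eigenvalues2x2(M):
    """Both roots of det(M - lambda I) = lambda^2 - tr(M) lambda + det(M)."""
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2


def matmul(M, N):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(M[i][k] * N[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def shift(M, c):
    """Return M + c I."""
    return [[M[i][j] + (c if i == j else 0) for j in range(2)] for i in range(2)]


A = [[2, 1], [1, 2]]        # EWs 3 and 1
M = shift(matmul(A, A), 2)  # A^2 + 2I = [[7, 4], [4, 7]]
print(sorted(l.real for l in eigenvalues2x2(A)))  # [1.0, 3.0]
print(sorted(l.real for l in eigenvalues2x2(M)))  # [3.0, 11.0]
```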

Find Eigenvectors of an Eigenvalue

The eigenvectors of an eigenvalue $\lambda$ are exactly the vectors $v \in N(A - \lambda I)$, $v \neq 0$.

Special Case: EW $0$, $A$ not invertible

We know the EVs are in $N(A - \lambda I)$ because they solve $(A - \lambda I)v = 0$. Thus $v$ is in the nullspace of $A - \lambda I$.

If $A$ is not invertible, then it has the EW $0$, which means that there is an EV $v$ solving $Av = 0v = 0$. Thus $v$ is in the nullspace of $A$.

If the EW $0$ has algebraic multiplicity $k > 1$, the nullspace $N(A)$ needs to have dimension $k$, not just $1$, otherwise $A$ won't have a complete set of EVs.